- Brief Table of Contents (Not Yet Final)

- 1. Fundamentals of Events and Event Streams

- What’s an Event?

- What’s an Event Stream?

- The Structure of an Event

- Aggregating State from Keyed Events

- Materializing State from Entity Events

- Deleting Events and Event Stream Compaction

- The Kappa Architecture

- The Lambda Architecture

- Event Data Definitions and Schemas

- Powering Microservices with the Event Broker

- Selecting an Event Broker

- Summary

- 2. Fundamentals of Event-Driven Microservices

- 3. Designing Events

- 4. Integrating Event-Driven Architectures with Existing Systems

- What Is Data Liberation?

- Data Liberation Patterns

- Data Liberation Frameworks

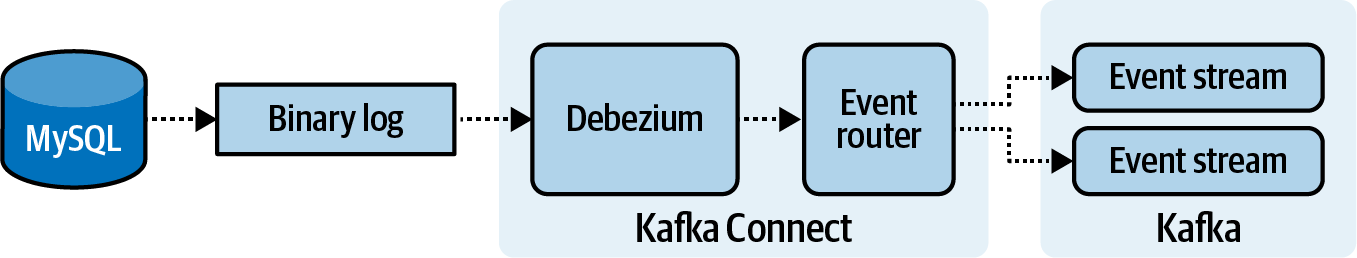

- Liberating Data Using Change-Data Capture

- Liberating Data with a Polling Query

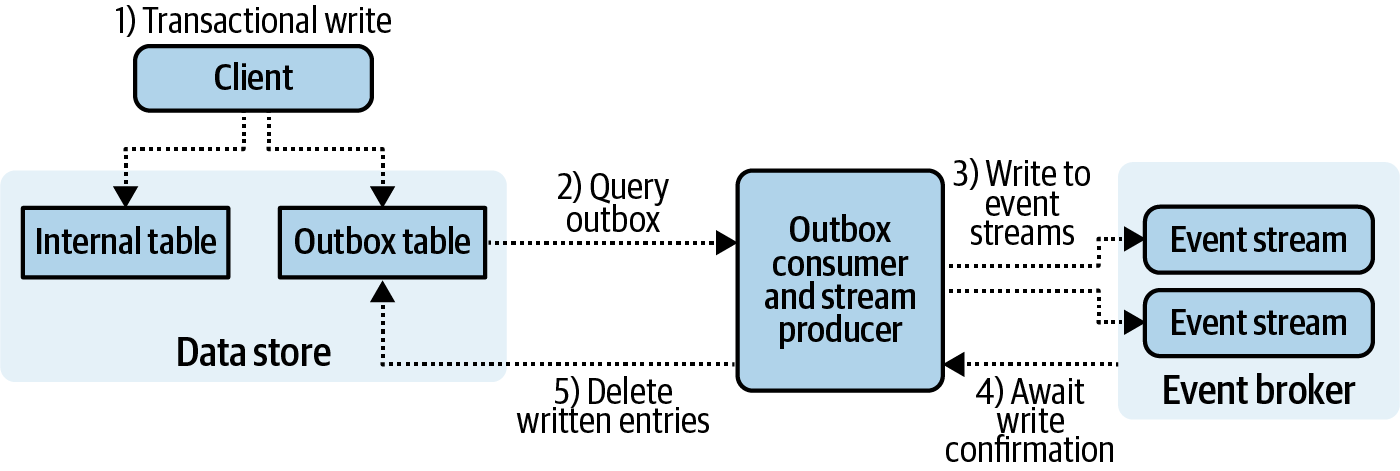

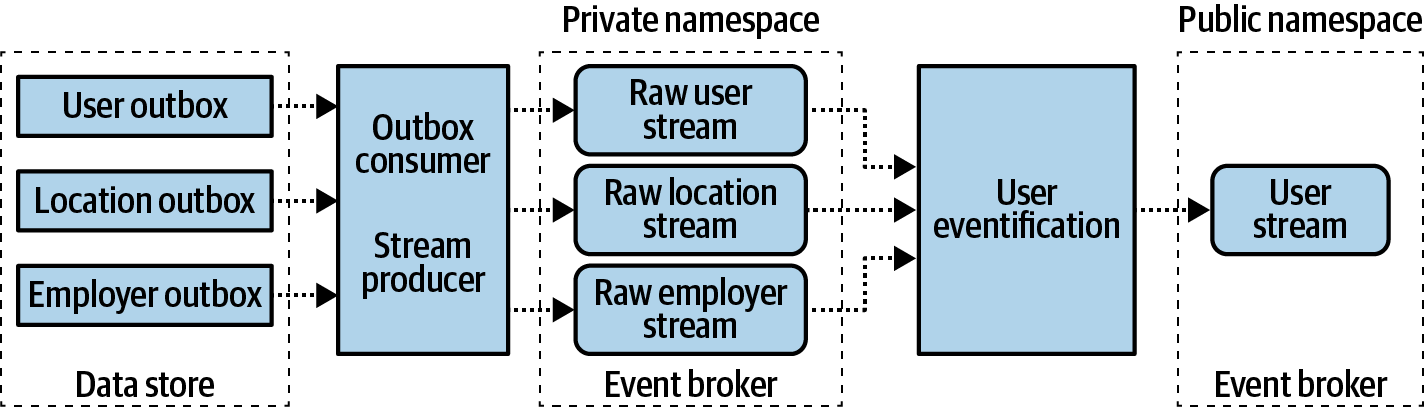

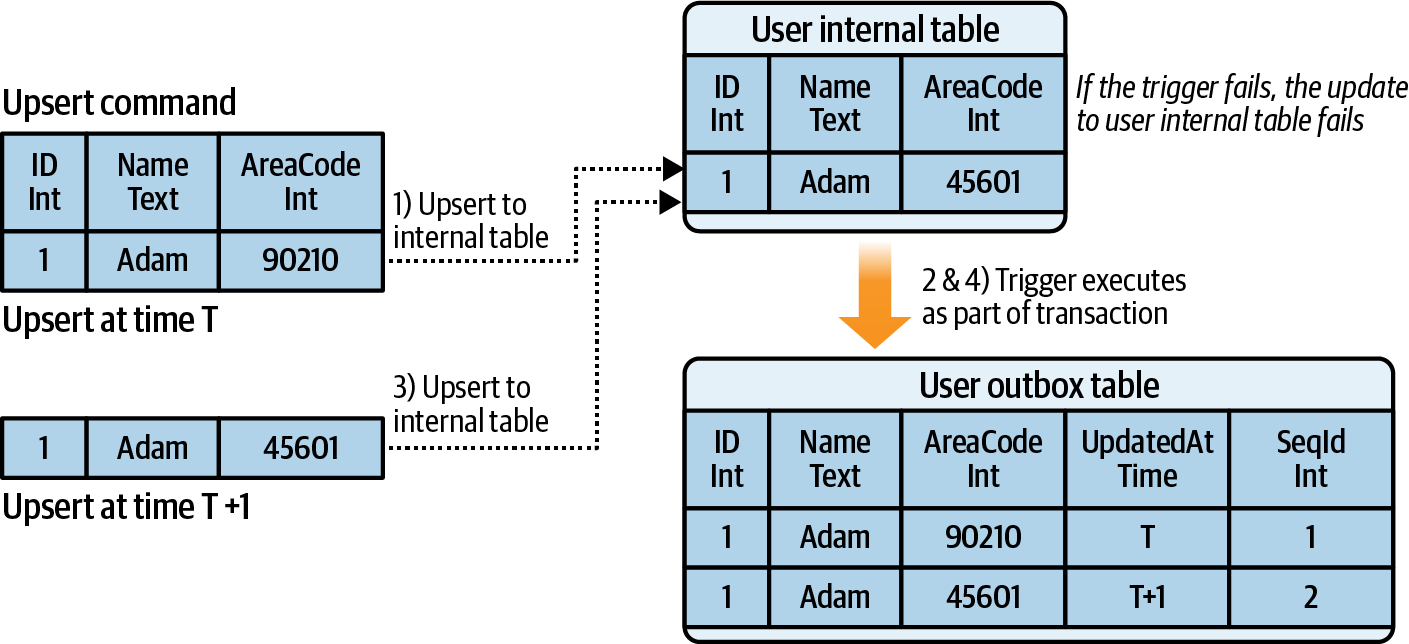

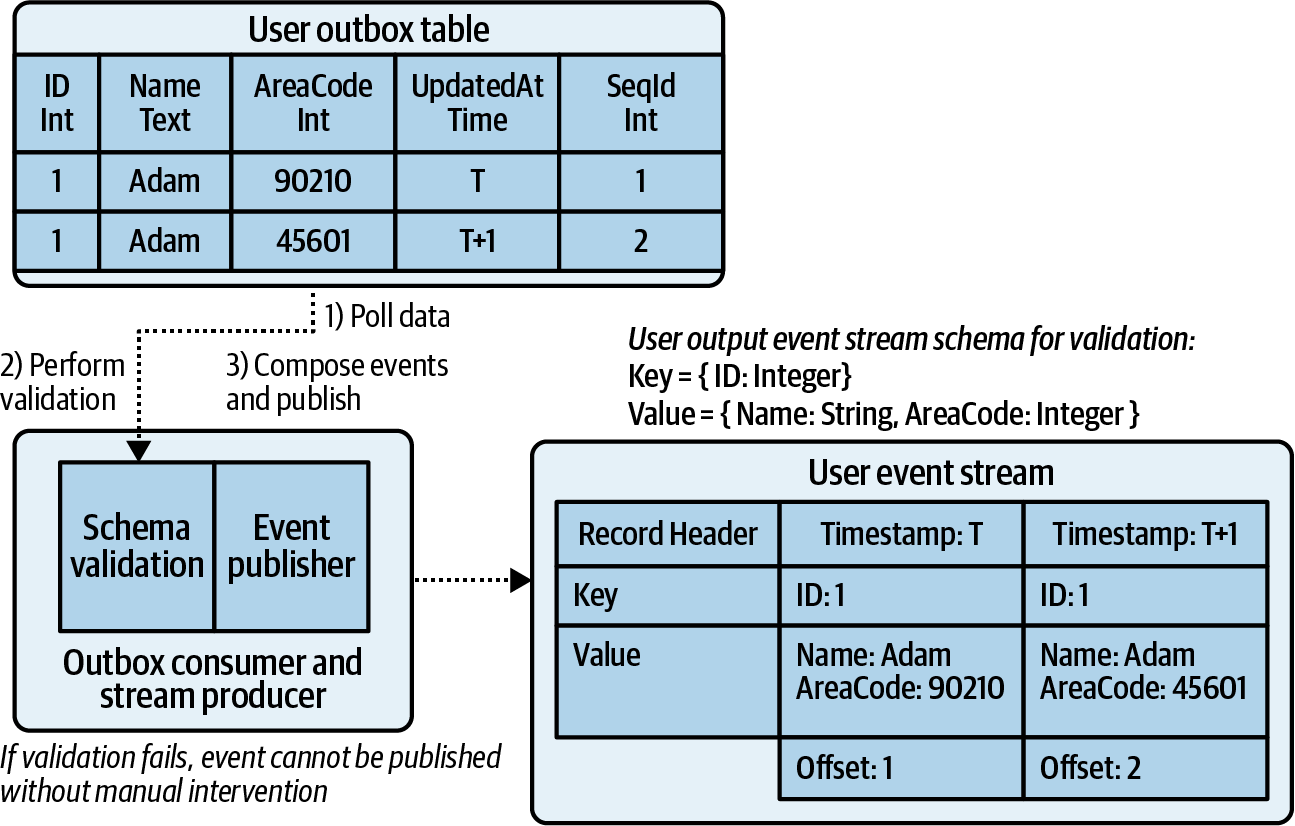

- Liberating Data Using Transactional Outbox Tables

- Making Data Definition Changes to Data Sets Under Capture

- Sinking Event Data to Data Stores

- The Impacts of Sinking and Sourcing on a Business

- Summary

Building Event-Driven Microservices

Leveraging Organizational Data at Scale

Adam Bellemare

Building Event-Driven Microservices

by Adam Bellemare

Copyright © 2025 Adam Bellemare. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

- Acquisitions Editor: Melissa Duffield

- Development Editor: Corbin Collins

- Production Editor: Clare Laylock

- Interior Designer: David Futato

- Cover Designer: Karen Montgomery

- Illustrator: Kate Dullea

- August 2020: First Edition

- September 2025: Second Edition

Revision History for the Early Release

- 2025-02-19: First Release

See http://oreilly.com/catalog/errata.csp?isbn=9781492057895 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Building Event-Driven Microservices, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

The views expressed in this work are those of the author, and do not represent the publisher’s views. While the publisher and the author have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the author disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

This work is part of a collaboration between O’Reilly and Confluence. See our statement of editorial independence.

979-8-341-62214-2

[LSI]

Brief Table of Contents (Not Yet Final)

Preface (unavailable)

Chapter 1. Why Event-Driven Microservices? (unavailable)

Chapter 2. Fundamentals of Events and Event Streaming

Chapter 3. Fundamentals of Event-Driven Microservices

Chapter 4. Schemas and Data Contracts (unavailable)

Chapter 5. Designing Events

Chapter 6. Integrating Event-Driven Architectures with Existing Systems

Chapter 7. Eventification and Denormalization (unavailable)

Chapter 8. Deterministic Stream Processing (unavailable)

Chapter 9. Stateful Streaming (unavailable)

Chapter 10. Building Workflows with Microservices (unavailable)

Chapter 11. Eventual Consistency and Convergence (unavailable)

Chapter 12. Basic Producer and Consumer Microservices (unavailable)

Chapter 13. Microservices Using Function-as-a-Service (unavailable)

Chapter 14. Heavyweight Framework Microservices (unavailable)

Chapter 15. Durable Execution Frameworks (unavailable)

Chapter 16. Lightweight Framework Microservices (unavailable)

Chapter 17. Streaming SQL Queries as Microservices (unavailable)

Chapter 18. Integrating Event-Driven and Request-Response Microservices (unavailable)

Chapter 19. The Event-Driven User-Experience (unavailable)

Chapter 20. Supportive Tooling (unavailable)

Chapter 21. Testing Event-Driven Microservices (unavailable)

Chapter 22. Deploying Event-Driven Microservices (unavailable)

Chapter 23. Conclusion (unavailable)

Chapter 1. Fundamentals of Events and Event Streams

Event streams served by an event broker tends to be the dominant mode for powerful event-driven architectures, though you’ll find that queues and ephemeral messaging also have a place. We’ll cover each of these modes in more detail in the second half of this chapter.For now, let’s now take a closer look at events, records, and messages, as well as the relationship between an event stream and an event broker.

What’s an Event?

An event can be anything that has happened within the scope of the business communication structure. Receiving an invoice, booking a meeting room, requesting a cup of coffee (yes, you can hook up a coffee machine to an event stream), hiring a new employee, and successfully completing arbitrary code are all examples of events that happen within a business. It is important to recognize that events can be anything that is important to the business. Once these events start being captured, event-driven systems can be created to harness and use them across the organization.An event is a recording of what happened, much like how an application’s information and error logs record what takes place in the application.

Unlike these logs, however, events are also the single source of truth, as covered in [Link to Come]. As such, they must contain all the information required to accurately describe what happened.Events use schemas, which is covered in more detail in [Link to Come]. For now, consider events to have well-defined field names, types, and default values.

I avoid using the term message when discussing event streams and event-driven architectures. It’s an overloaded term that has different meanings for different people, and is also heavily influenced by the technology you’re using. Think about what messages mean in our day-to-day life, particularly if you use instant messaging. One person sends a message to a specific person or to a specific private group. Additionally, the messages may or may not be durable - some chat applications delete the messages after read, others after a period of time.

Events in an event-driven architecture are more akin to a post that you publish to a social media platform or message board. The posting is public to all, and everyone is free to read it and use it as they see fit. More than one person can read the post, and of course the post can be read again and again. It isn’t deleted just because it’s old. Event streams are effectively a broadcast to share important data, letting others subscribe to it and use it however they see fit.

Just to be clear, you can use event streams to send messages. All messages are events, but not all events are messages. But for clarity’s sake, I’ll use the terms event and record instead of message for the remaining chapters of this book. But before we dig further into events, let’s take a brief look at the event stream.

What’s an Event Stream?

An event stream is durable and append-only immutable log. Records are added to the end of the log (the tail) as they are published by the producer. Consumers begin at the start of the log (the head) and consume records at their own processing rate.

In its most basic form, an event stream is a time-stamped sequence of business facts pertaining to a domain. Events form the basis for communicating important business data between domains reliably and repeatably.

Event streams have several critical properties that enable us to rely on them for event-driven microservices, and as the basis for effective inter-domain data communication as a whole. For clarity, and with a bit of repetition, these properties include:

- Immutability

-

Events cannot be modified once written to the log. The contents cannot be altered, nor can their offset position, timestamp, or any other associated metadata. You may only add new events.

- Partition Support

-

Partitions provide the means for supporting massive datasets. A consumer can subscribe to one or more paritions from a single event stream, allowing multiple instances of a single microservice to consume and process the stream in parallel.

- Indexed

-

Events are assigned an immutable index when written to the log. The index, also often called an offset, uniquely identifies the event.

- Strictly Ordered

-

Records in an event stream partition are served to clients in the exact same order that it was originally published.

- Durability and replayability

-

Events are durable. They can be consumed either immediately or in the future. Events can be replayed by new and existing consumers alike, provided the event broker has sufficient storage to host the historical data. Events are not deleted once they are read, nor are they simply discarded in the case of an absence of consumers.

- Indefinite Storage Support

-

You can retain all events in your stream for as long as necessary. There is no forced expiry or time-limited retention, allowing you to consume and reconsume events as often as you need.

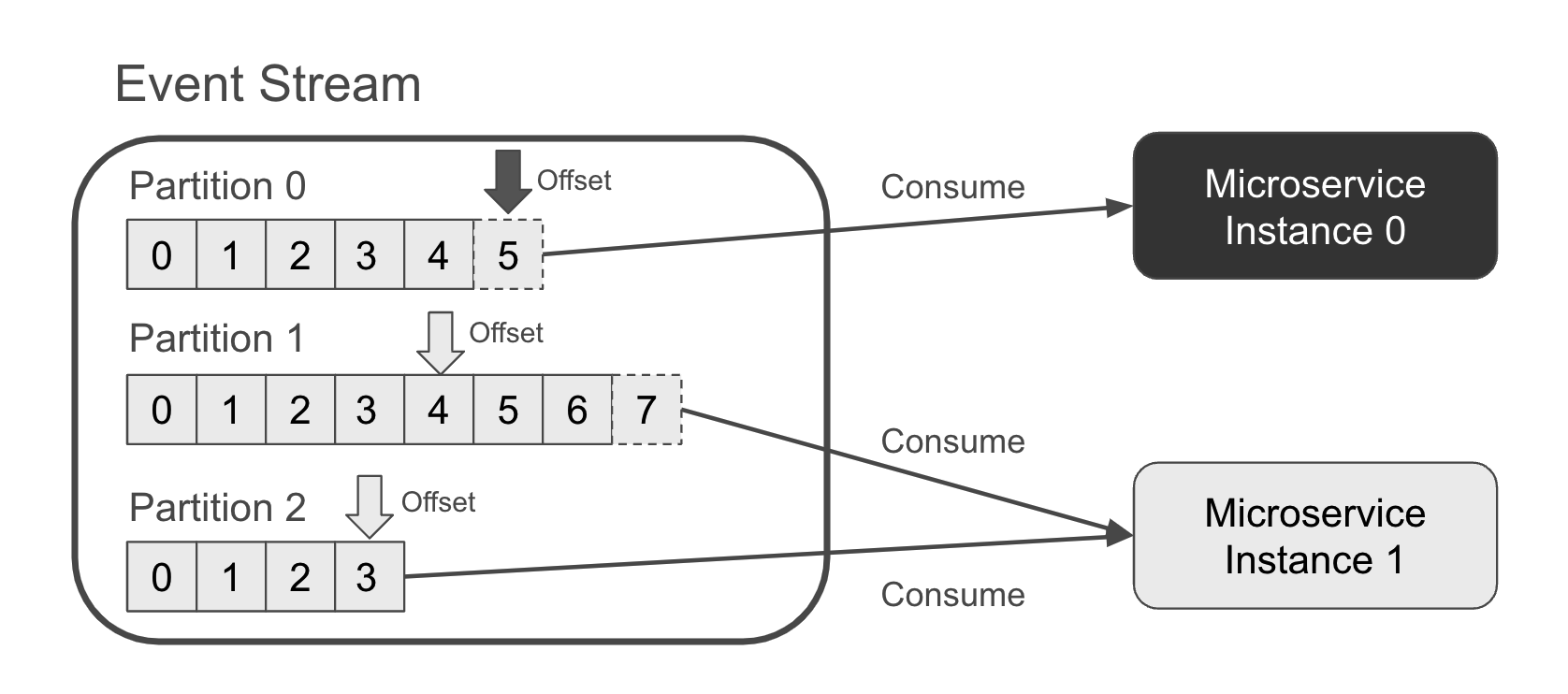

Figure 1-1 shows an event stream with three partitions. New events have just been appended to partition 0 (offset 5) and partition 1 (offset 7). The microservice consuming these events has two instances. Instance 0 is consuming only partition 0, which instance 1 is consuming both partition 1 and partition 2.

Figure 1-1. An event stream with two microservice instances consuming from a three partitions

With sufficient processing power, the consuming service will remain up to date with the event stream. Meanwhile, a new consumer beginning at an earlier offset (or the head) will need to process all of the events to catch up to current time.

Event streams are hosted on an event broker, with one of the most popular (and de-facto standard) being Apache Kafka. The event broker, such as in the case of Kafka, provides a structure known as a topic that we can write our events to. It also handles everything from data replication and rebalancing to client connections and access controls. Publishers write events to the event stream hosted in the broker, while consumers subscribe to event streams and receive the events.

Unfortunately, due to a long and often messy history, event brokers have often been confused with ephemeral messaging and queues. Each of these three options is different from the others. Let’s take a deeper look at each, and why event streams form the backbone of a modern event driven architectures.

Ephemeral Messaging

A channel is an ephemeral substrate for

communicating a message between one producer and one or more subscribers. Messages are directed to specific consumers, and they are not stored for any significant length of time, nor are they written to durable storage by the broker. In the case of a system failure or a lack of subscribers on the channel, the messages are simply discarded, providing at-most-once delivery. NATS.io Core (not JetStream) is an example of this form of implementation.Figure 1-2 shows a single producer sending messages to the ephemeral channel within the event broker. The ephemeral messages are then passed on to the currently subscribed consumers. In this figure, Consumer 0 obtains messages 7 and 8, but Consumer 1 does not because it is newly subscribed and has no access to historical data. Instead, Consumer 1 will receive only message 9 and any subsequent messages.

Figure 1-2. An ephemeral message-passing broker forwarding messages

Ephemeral communication lend itself well to direct service-to-service communication with low overhead. It is a message-passing architecture, and is not to be confused with a durable publish-subscribe architecture as provided by event streams.

Message-passing architectures provides point-to-point communication between systems that don’t necessarily need at-least-once delivery and can tolerate some data loss. As an example, the online dating application Tinder uses NATS to notify users of updates. If the message is not received, not a big deal—a missed push notification to the user only has a minor (though negative) effect on the user experience.

Ephemeral message-passing brokers lack the necessary indefinite retention, durability, and replayability of events that we need to build event-driven data products. Message-passing architectures are useful for event-driven communication between systems for current operational purposes but are completely unsuited for providing the means to communicate data products.

Queuing

A queue is a durable sequence of stored records awaiting processing. It is fairly common to have multiple consumers that asynchronously (and competitively) select, process, and acknowledge records on a first-come, first-served basis. This is one of the major differences when compared to event streams, which use partition-exclusive subscriptions and strict in-order processing.

A work queue is a common use cases. The producer publishes “work to do” records, while consumers dequeue records, processes them, then signals to the queue broker that the work is complete. The broker then typically deletes the processed records, which is the second major difference when compared to the event stream, as the latter retains the records as long as specified (including indefinitely).

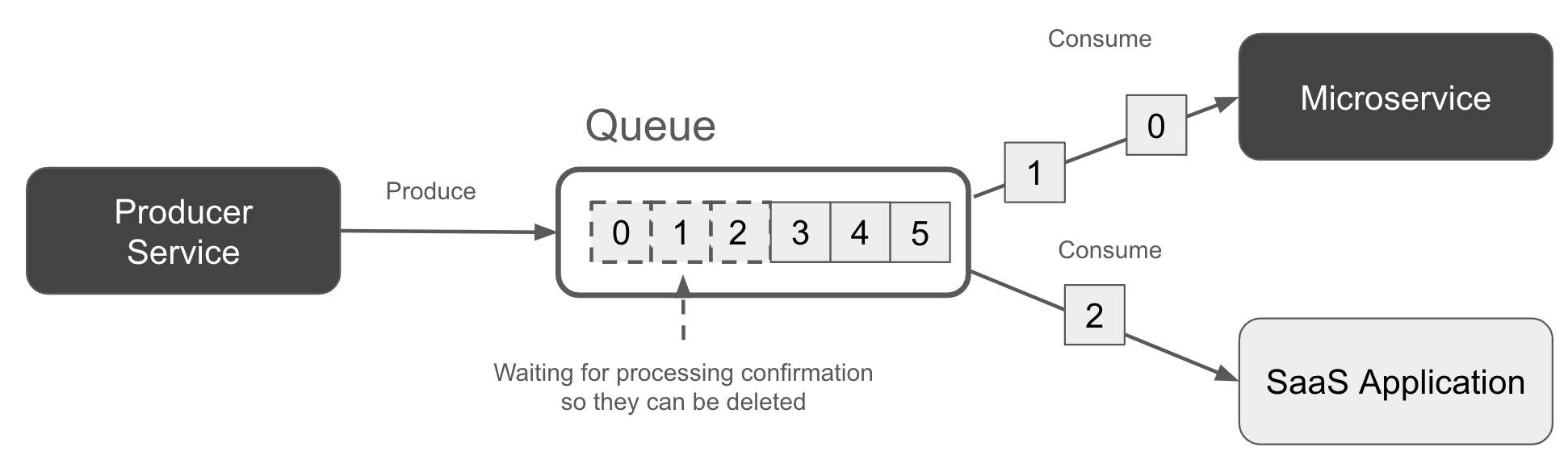

Figure 1-3 shows two subscribers consuming records from a queue in a round-robin manner. Note that the queue contains records currently being processed (dashed lines) and those yet to be processed (solid lines).

Figure 1-3. A queue with two subscribers each processing a subset of events

Queues typically provide “at-least once” processing guarantees. Records may be proccessed more than once, particularly if a subscriber fails to commit its progress back to the broker, say due to a crash, after processing a record. In this case, another subscriber may pick up the record and process it again.

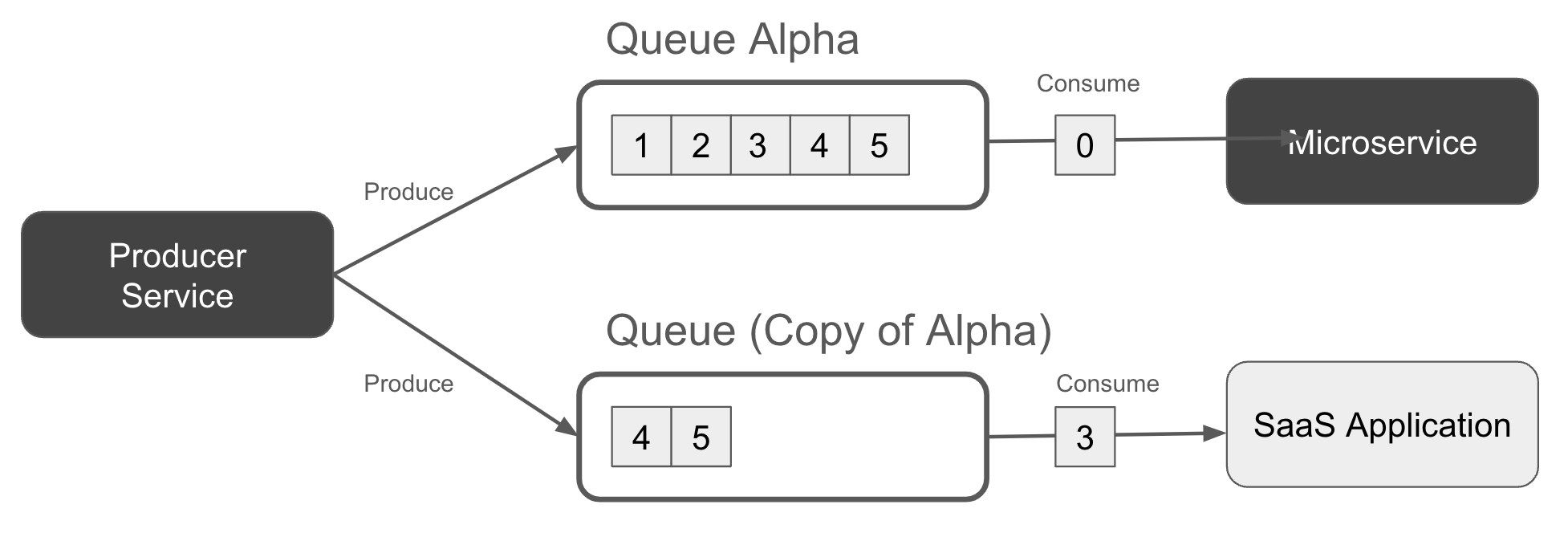

If multiple independent consumers (e.g. microservice applications) need access to the records in the queue (say in fulfilling a Sales event), then you’d have to either use an event stream, or create a queue for each consuming service. Figure 1-4 shows an example where the producer service writes a copy of each record to two different queues to ensure that each consumer has a copy of the data to process.

Figure 1-4. A producer writing to two duplicate queues, one or each consumer application.

Warning

Your producer service may not be able to atomically write to more than one queue at a time. You’ll need to review the limitations of your broker’s multi-queue write capabilities. A failure to do so may see the queues diverge from one another over time, as race conditions and intermittent failures may see a record written to one queue, but not the others.

Historically, queue brokers have limited the time-to-live (TTL) for storing records in the queue. Records not processed within a certain time frame are marked as dead, purged, and no longer delivered to the subscribers - even if they have not yet been processed! Similar to ephemeral communications, time-based retention and non-replayable data has influenced the false notion that brokers (queue and event alike) cannot be used to retain data indefinitely.

With that being said, it is important to note that low TTLs are no longer the norm, at least for the more modern queue technologies. For example, both RabbitMQ and ActiveMQ let you set unlimited TTL for your records, letting you keep them in the queue for as long as is necessary for your business.

Note

Modern queue brokers may also support replayability and infinite retention of records via durable append-only logs - effectively the same as an event stream. For example, both Solace and RabbitMQ Streams allow for individual consumers to replay queued records as though it were an event stream.

One last thing before we close out this section. Queues can also provide priority-based record ordering for its consumers, so that high priority records get pushed to the front, while low priority records remain in the back of the queue until all higher priority records are processed. Queues provide an ideal data structure for priority ordering, since they do not enforce a strict first-in, first-out ordering like an event stream.

Queues are best used as an input buffer for a specific downstream system. You can rely on a queue to buffer records that need processing by another system, allowing the producer application to get on with its other tasks - including writing more records to the queue. You can rely on the queue to durably store all the records until your consumer service can get to working on them. Additionally, your consumer can scale up its processing by registering new consumers on the queue, and sharing processing in a round-robin manner.

The Structure of an Event

Events, as written to an event stream, are typically represented using a key, a value, and a header. Together, these three components form the record representing the event.

The record’s exact structure will vary with your technology of choice. For example, queues and ephemeral-messaging tend to use similar yet different conventions and components, such as header keys, routing keys, and binding keys, to name a few. But for the most part, this following three-piece record format is generally applicable for all events.

- The key

-

The key is optional but extremely useful. It’s typically set to a unique ID that represents the data of the event itself, akin to a primary key in a relational database. It is also commonly used to route the event to a specific partition of the event stream. More on that later in this chapter.

- The value

-

Contains the bulk of the data relating to the event. If you think of the event key as the primary key of a database table’s row, then think of the value as all the other fields in that row. The value carries the majority of an event’s data.

- The header (also known as “record properties”)

-

Contains metadata about the event itself, and is often a proprietary format depending on the event broker. The record is usually used to record information such as datetimes, tracking IDs, and user-defined key-value pairs that aren’t suitable for the value.

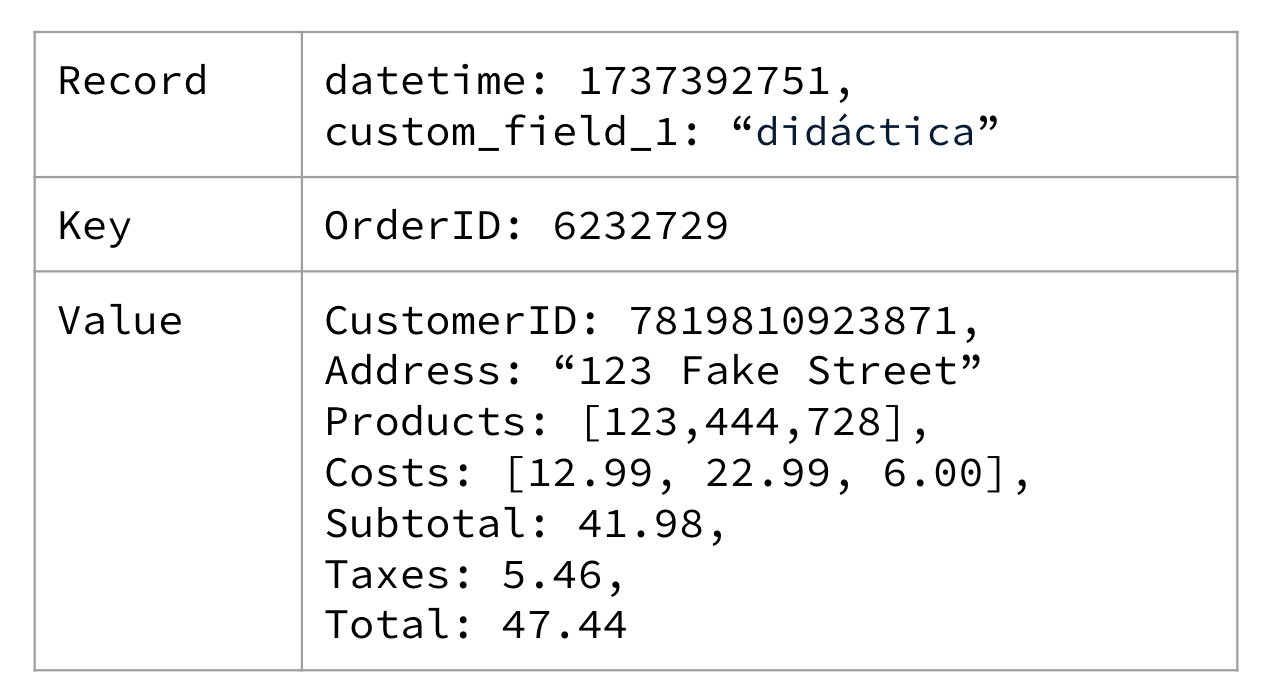

An example of the record structure is shown in Figure 1-5, containing a minimal set of details pertaining to an e-commerce order.

Figure 1-5. A simple e-commerce order showing the items purchased by a user, along with the total cost

Events are immutable. You can’t modify an event once it is published to the event stream. Immutability is an essential property for ensuring that consumers each have access to exactly the same data. Mutating data that has already been read by several consumers is of no benefit, since there is no way to easily notify them that they must make a change to data that they’re already read. You can, however, create and publish a new events containing the necessary corrections or updates, covered more in chapter [Link to Come].

Events tend to fall into three main classifications: unkeyed events, keyed events, and entity events. Let’s take a look closer look at each.

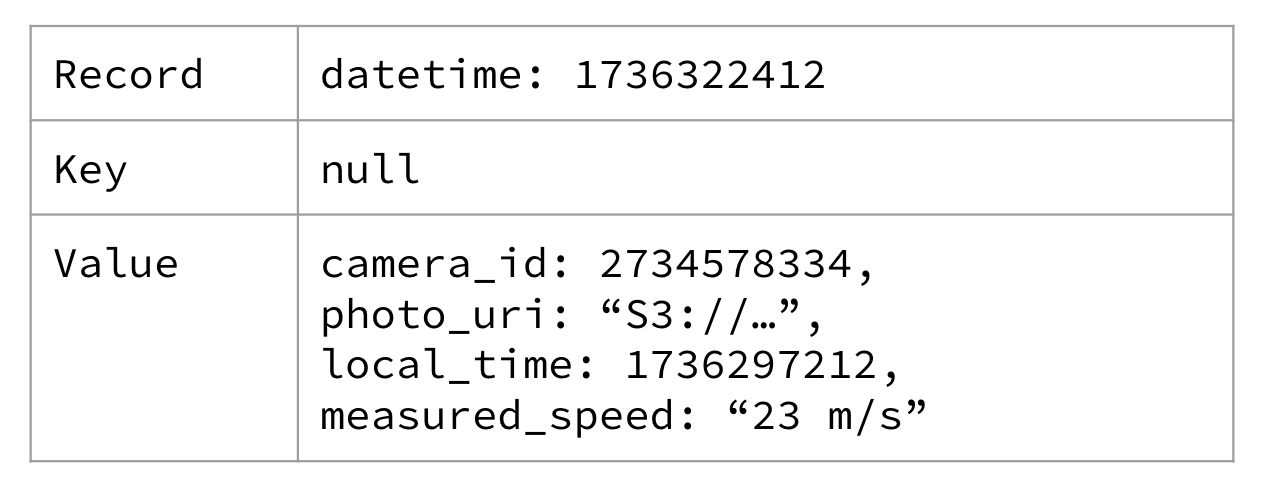

Unkeyed Event

Unkeyed events do not contain a key. They’re generally considered to be fully independent of one another. There is no special treatment for routing to a particular partition.Unkeyed events are commonly some form of raw measurement. For example, a camera that photographs an automobile running through a red light may creates an unkeyed event, as shown in Figure 1-6

Figure 1-6. An unkeyed red light traffic camera event

Could the red light camera have produced the event with the camera_id as the key? Sure! But it didn’t, because it only records the data in that specific format, and this specific camera doesn’t support custom post-processing. You get the event in the format that it specifies in the user manual.

If we want to add a key, we’re going to have to do some additional processing and emit a new event stream, as you’ll see in this next section.

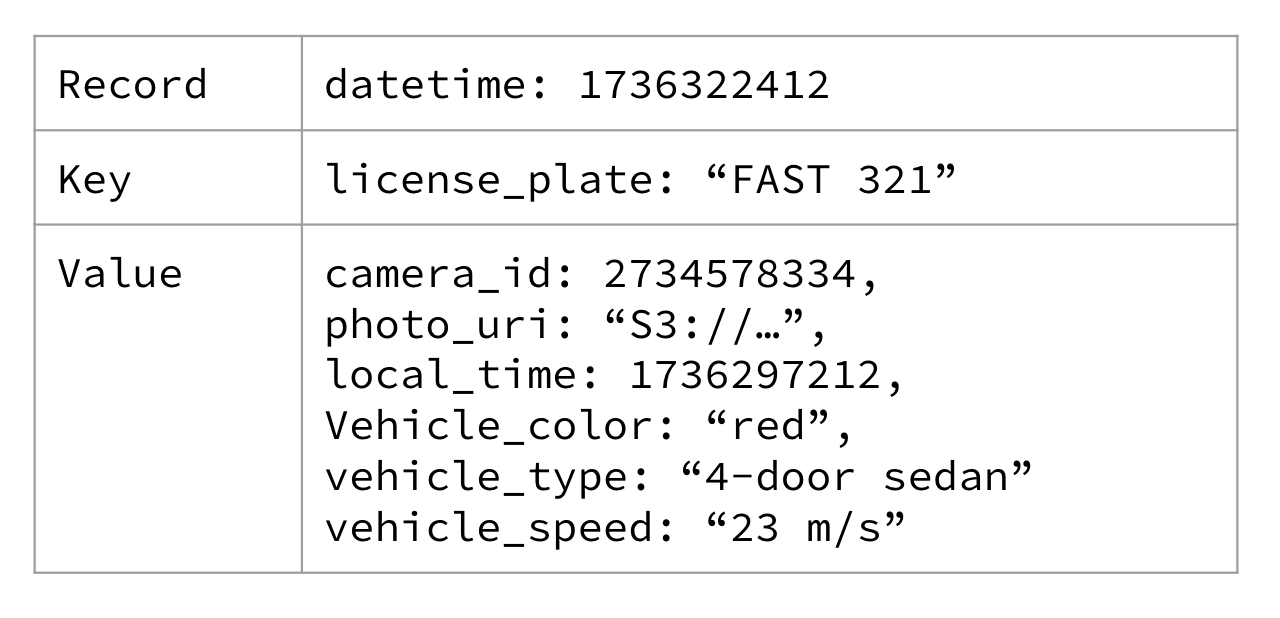

Keyed Events

Keyed events contain a non-null key related to something important about the event. The event key is specified by the producer service when it creates the record, and remains immutable once written to the event stream.

If we were to apply a key to the red light traffic camera data, we may choose to use the driver’s license plate. You can see an example of that in Figure 1-7.

Figure 1-7. A red light traffic camera event keyed on the driver’s license plate

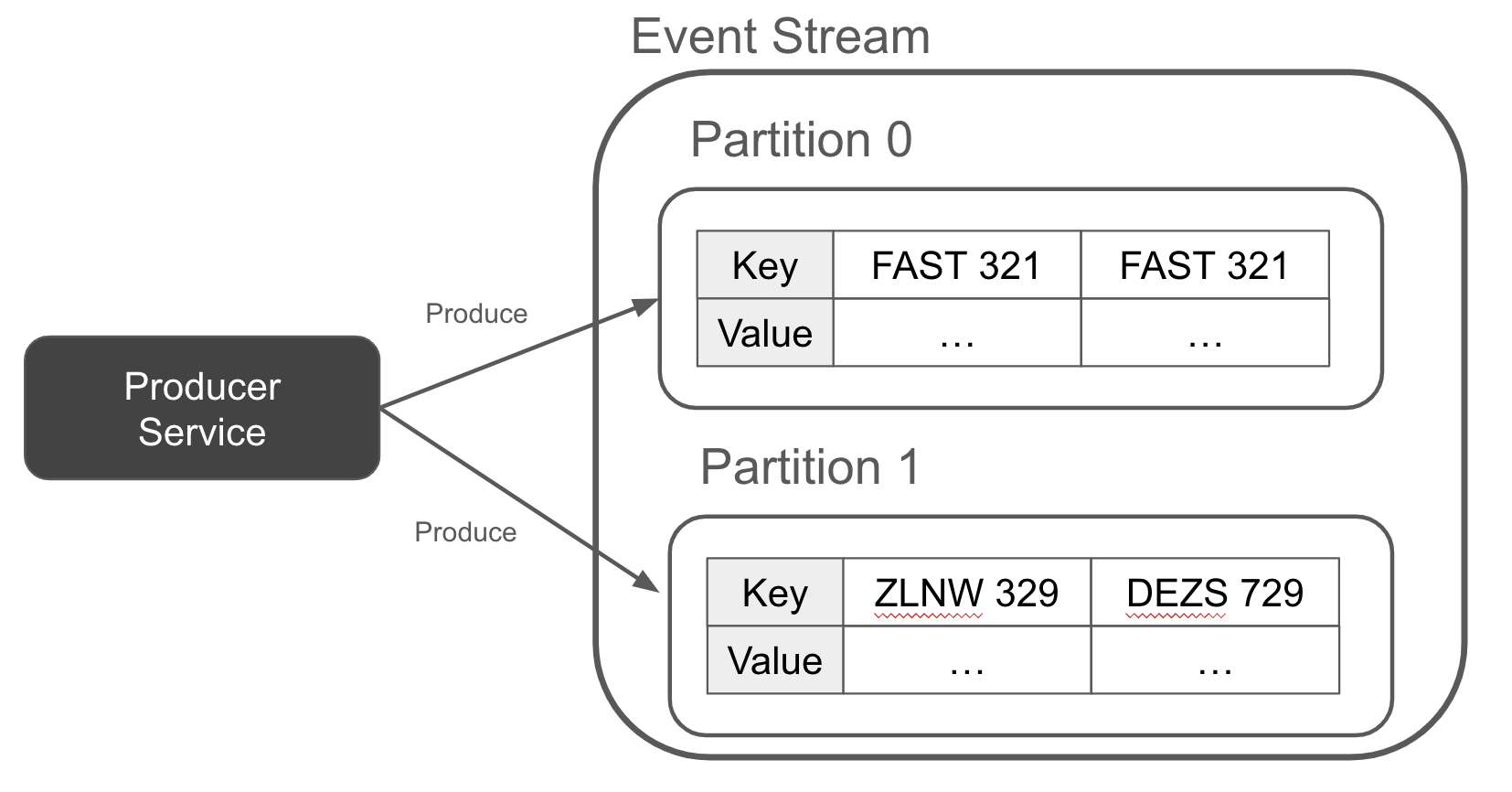

A key enables the producer to partition the records deterministically, with all records of the same key going to the same event stream partition, as per Figure 1-8. This provides your event consumers with a guarantee that all data of a given key will be in just a single partition. In turn, your consumers can easily divide up the work on a per-partition basis, knowing that any key-based work they perform will only rely on reading a single partition, and not all the events from all partitions.

Figure 1-8. A red light traffic camera event keyed on the driver’s license plate

As illustrated in the partitioned topic, you can see that there are at least two events for FAST 321. Each of these events represents an instance of the car running through a red light. Keep this in mind as we go to our final event classification, the entity event.

Entity Event

An entity event represents a unique thing, and is keyed on the unique ID of that thing. It describes the properties and state of the entity at a specific point in time. Entity events are also sometimes called state events, as they represent the state of a given thing at a given point in time.

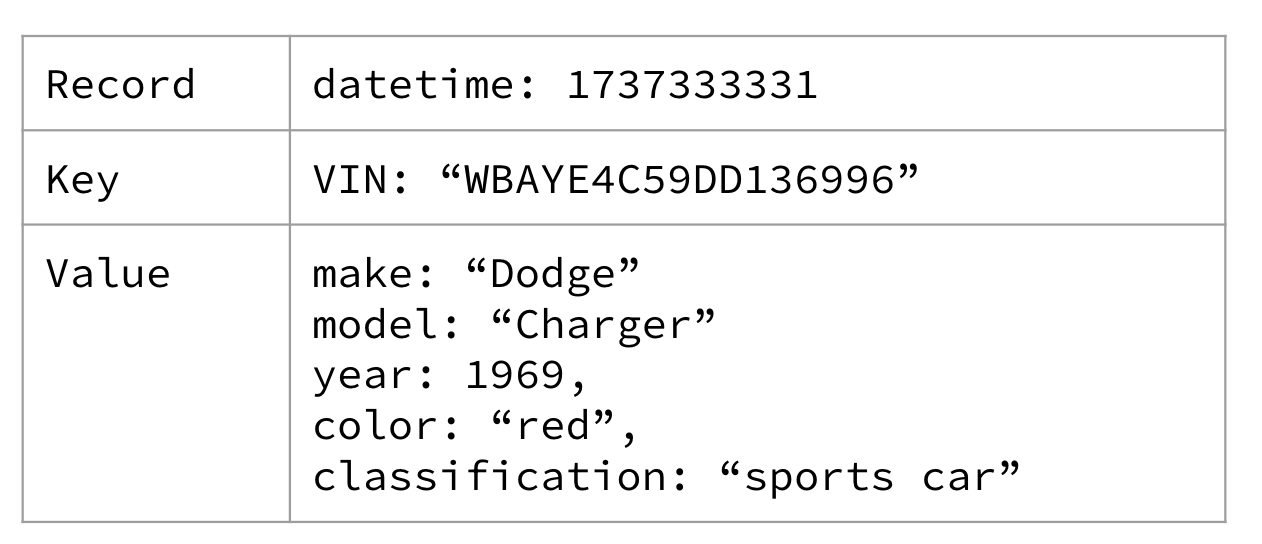

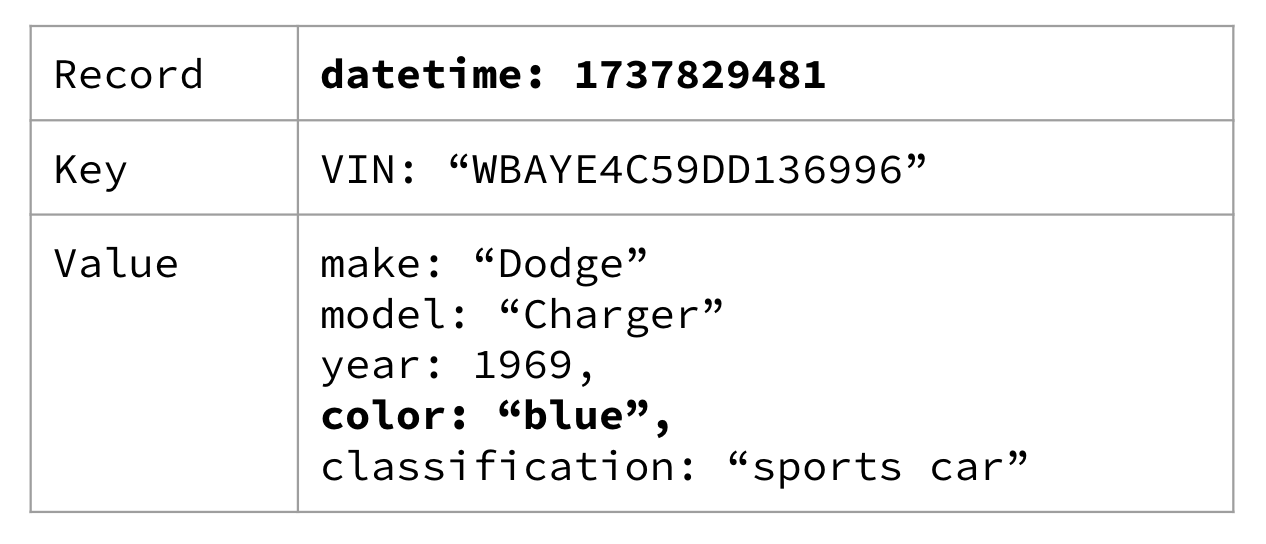

For something a bit more concrete, and to continue the red light camera analogy, you could expect to see a Car as an entity event. You may also see entity events for Driver, Tire, Intersection, or any other number of “things” involved in the scenario. A car entity is featured in Figure 1-9.

Figure 1-9. A Car entity describing the sports car that keeps running the red lights

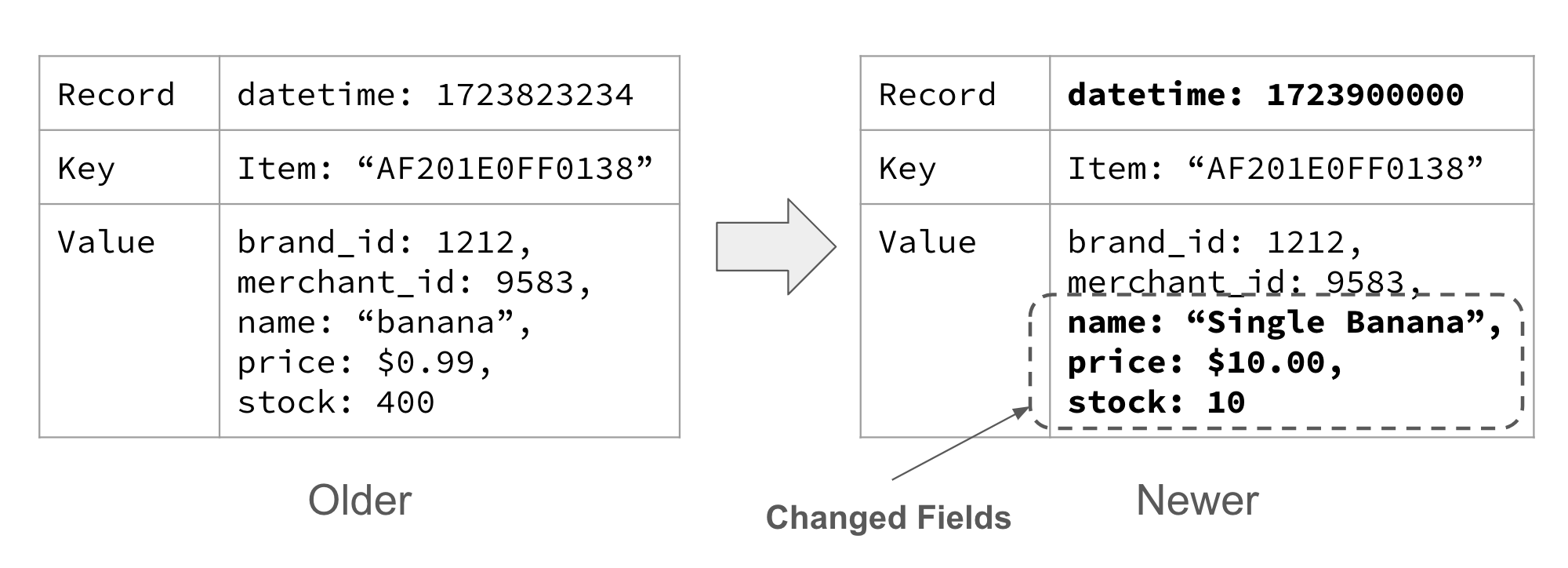

You may find it helpful to think of an entity event like you would think of a row in a database table. Both have a primary key, and both represent the data for that primary key as it is at the current point in time. And much like a database row, the data is only valid for as long as the data remains unchanged. Thus, if you were to repaint the car to blue, you could expect to see a new event with the color updated, as in Figure 1-10.

Figure 1-10. The car has been repainted blue

You may also notice that the datetime field has been updated to represent when the car was painted blue (or at least when it was reported). You’ll also notice that the entity event also contains all the data that didn’t change. This is intentional, and it actually allows us to do some pretty powerful things with entity events - but we’ll cover that in a bit more detail in Chapter 3.

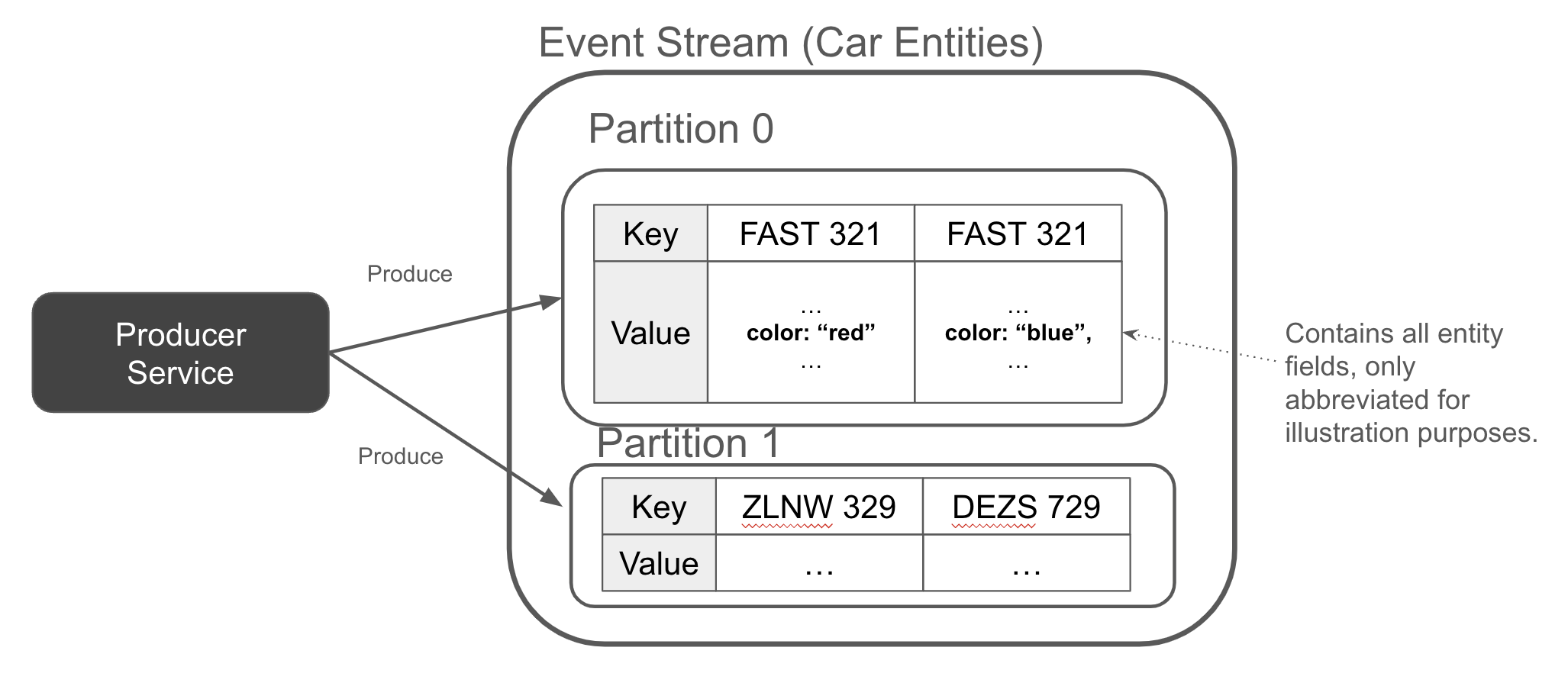

Similarly to how keyed events each go to the same partition, the same is true for entity events. Figure 1-11 shows an event stream with two events for the FAST 321 - one when it was red (the oldest event), and one while it is blue (the latest event, appended to the tail of the stream).

Figure 1-11. The producer appends a full entity event whenever an entity is created, updated, or deleted

Entity events are particularly important in event-driven architectures. They provide a continual history of the state of an entity and can be used to materialize state (covered in the next section).

There is more to event design than what we’ve covered in this chapter so far, but we’re going to defer going deeper into that until chapter [Link to Come]. Instead, lets look at how we might use these events that we’ve just introduced to build real world event-driven microservices.

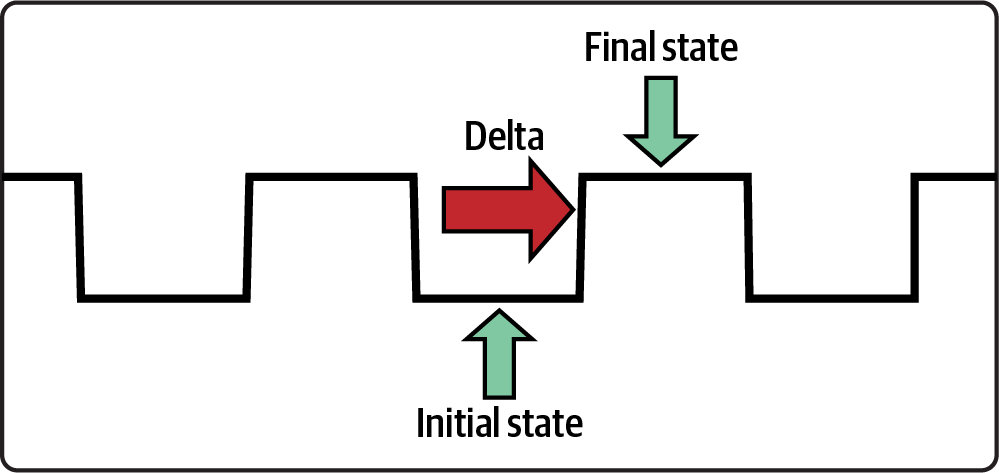

Aggregating State from Keyed Events

An aggregation is the process of consuming two or more events and combining them into a single result. Aggregations are a common data processing primitive for event-driven architectures, and are one of the primary ways to generate state out of a series of events. Building an aggregation requires storing and maintaining durable state, such that aggregation progress is persisted if the service fails. State and recovery are covered in chapter [Link to Come].

The keyed event plays an important role in aggregations, since all the data of the same key is in the same partition. Thus, you can simply aggregate a single key by reading a single partition. If you’re reading multiple topics, then you’ll need to ensure that they’re partitioned identically - otherwise you’re going to have to repartition the data so that the streams match one another. Repartitioning and co-partitioning are covered further in chapter [Link to Come].

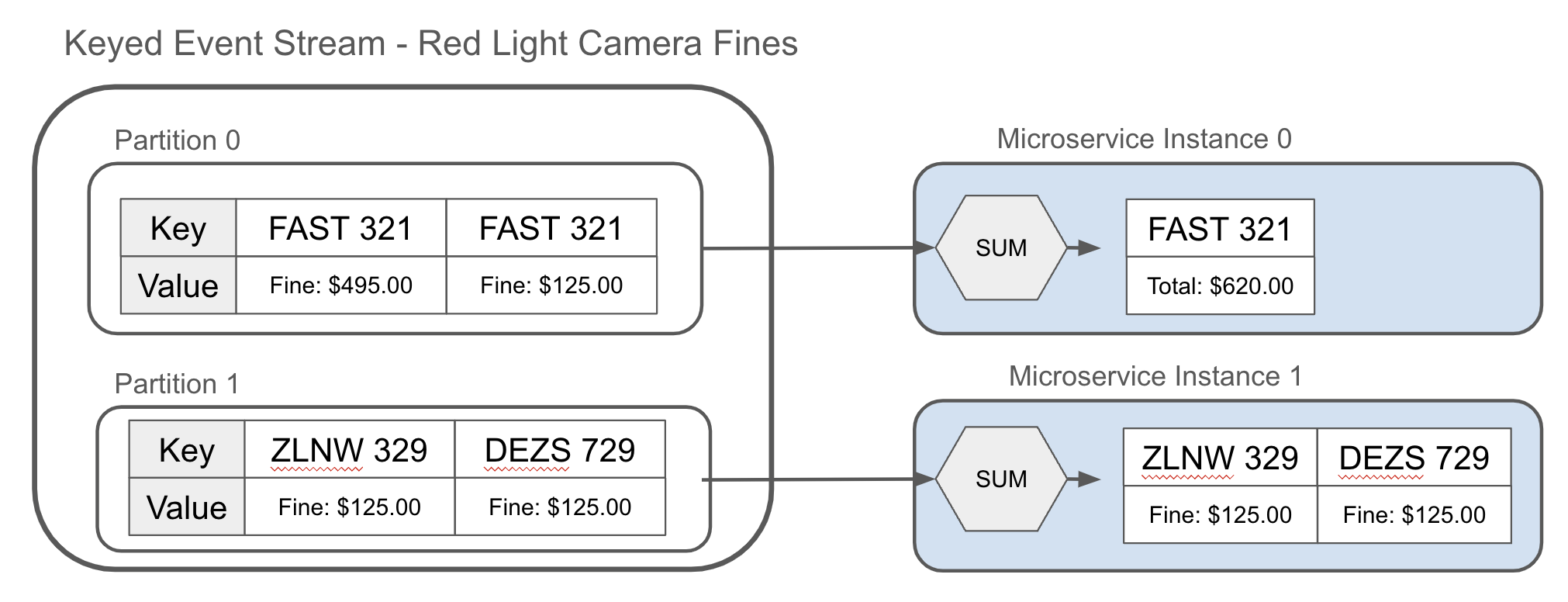

An aggregation may be as simple as a sum, as shown in Figure 1-12. For example, summing up the traffic infraction tickets that have been issued to the owner of a speeding sports car, and computing the total of the amount owed.

Figure 1-12. Aggregating the keyed events of the red light camera fines

Aggregations may also be more complex, incorporating multiple input streams, multiple event types, and internal state machines to derive more complex results. We’ll revisit aggregations throughout the book, but for now, let’s take a look at materializations.

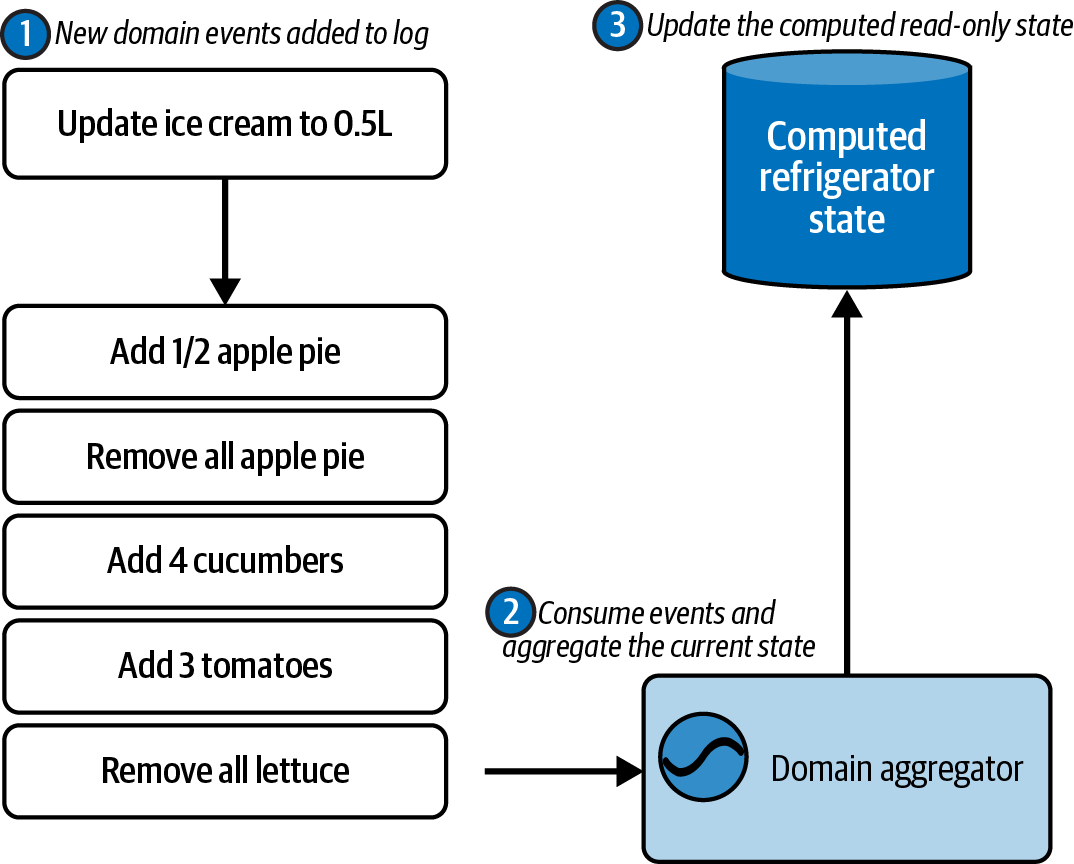

Materializing State from Entity Events

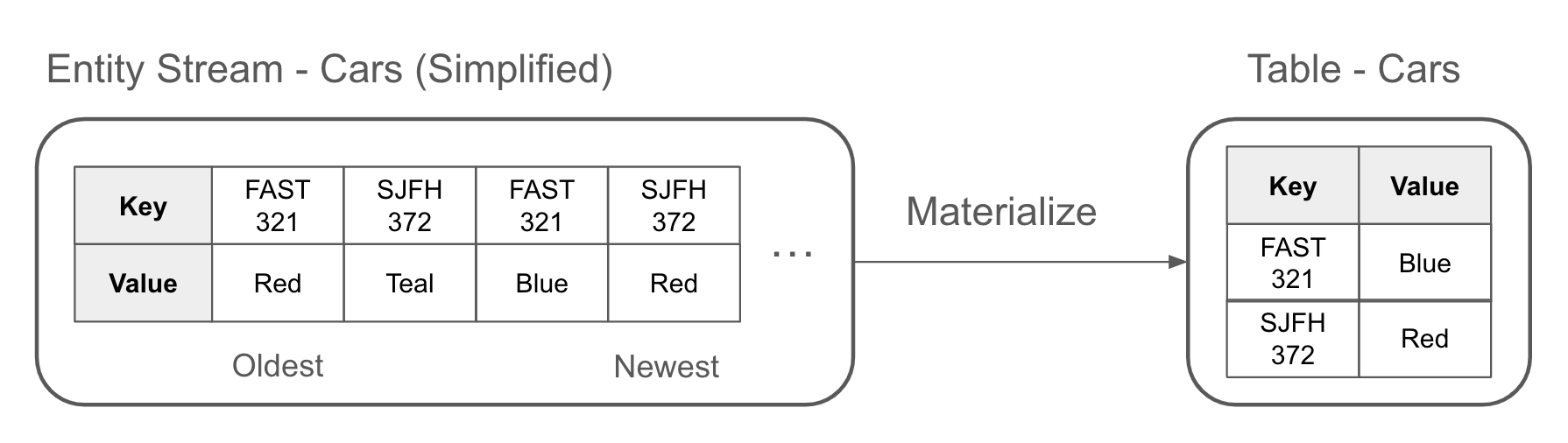

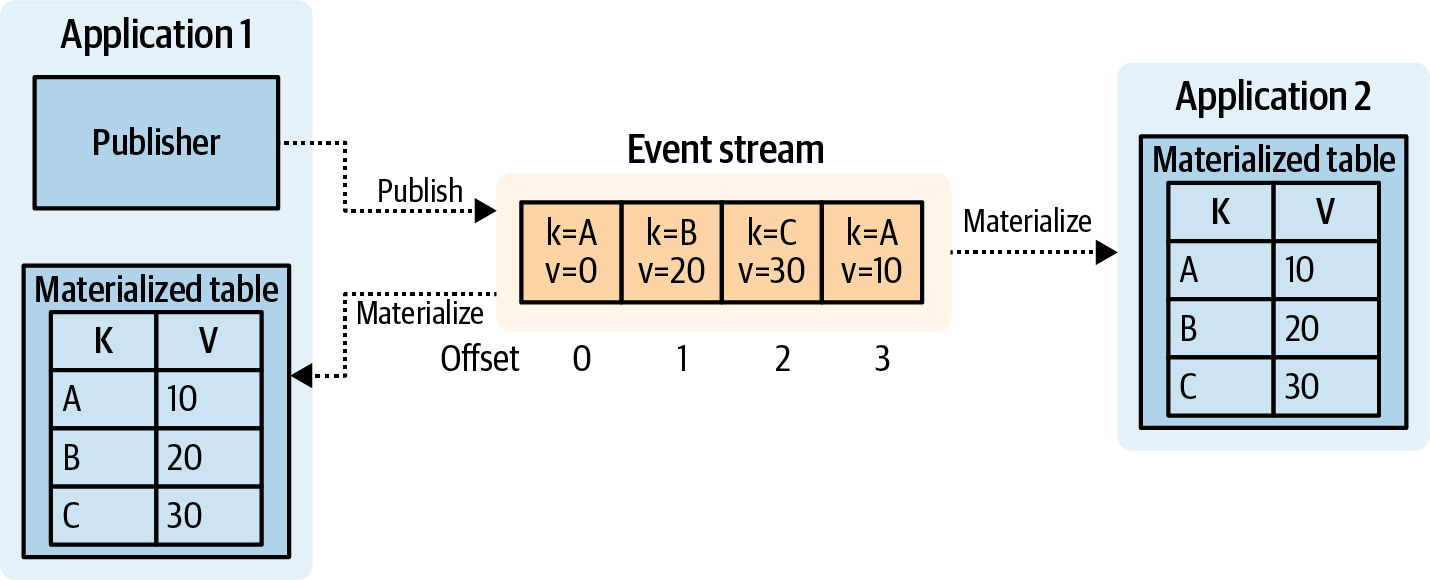

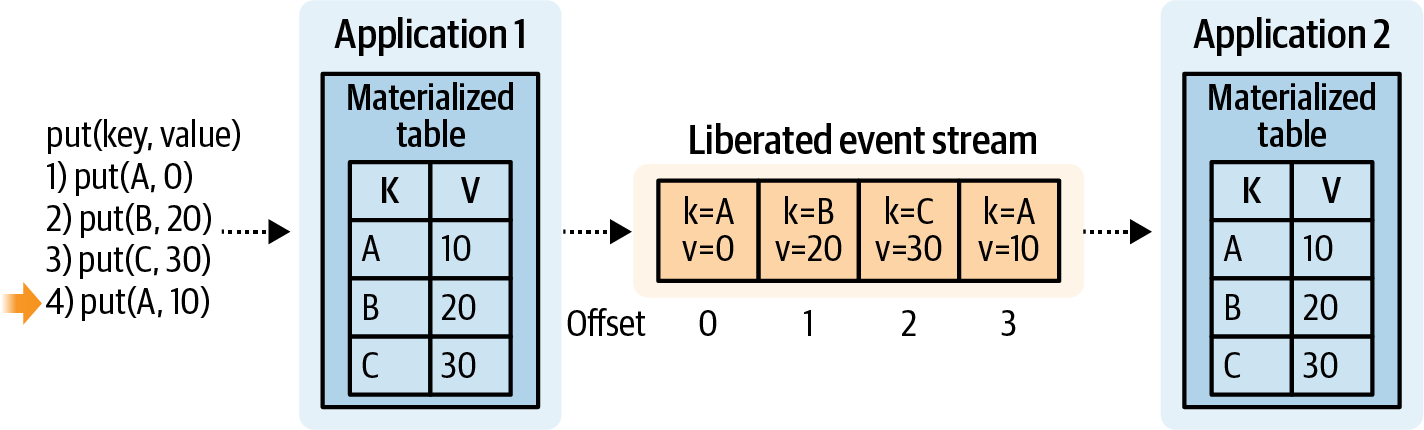

A materialization is a projection of a stream into a table. You materialize a table by applying entity events, in order, from an entity event stream. Each entity event is upserted into the table, such that the most recently read event for a given key is represented. This is illustrated in Figure 1-13, where FAST 321 and SJFH 372 both have the newest values in their materialized table.

Figure 1-13. Materializing an event stream into a table

You can also convert a table into a stream of entity events by publishing each update to the event stream.

Tip

Stream-Table duality is the principle that a stream can be represented by a table, and a table can be represented as a stream. It is fundamental to the sharing of state between event-driven microservice, without any direct coupling between producer and consumer services.

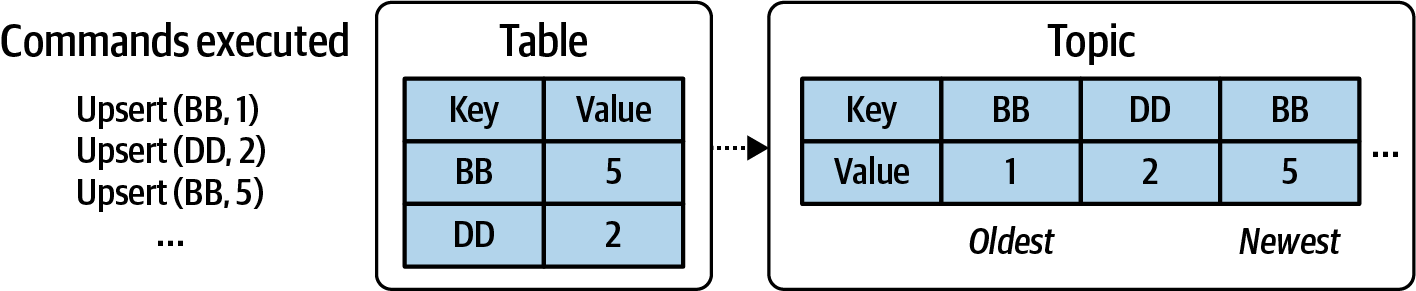

In the same way, you can have a table record all updates and in doing so produce a stream of data representing the table’s state over time. In the following example, BB is upserted twice, while DD is upserted just once. The output stream in Figure 1-14 shows three upsert events representing these operations.

Figure 1-14. Generating an event stream from the changes applied to a table

Deleting Events and Event Stream Compaction

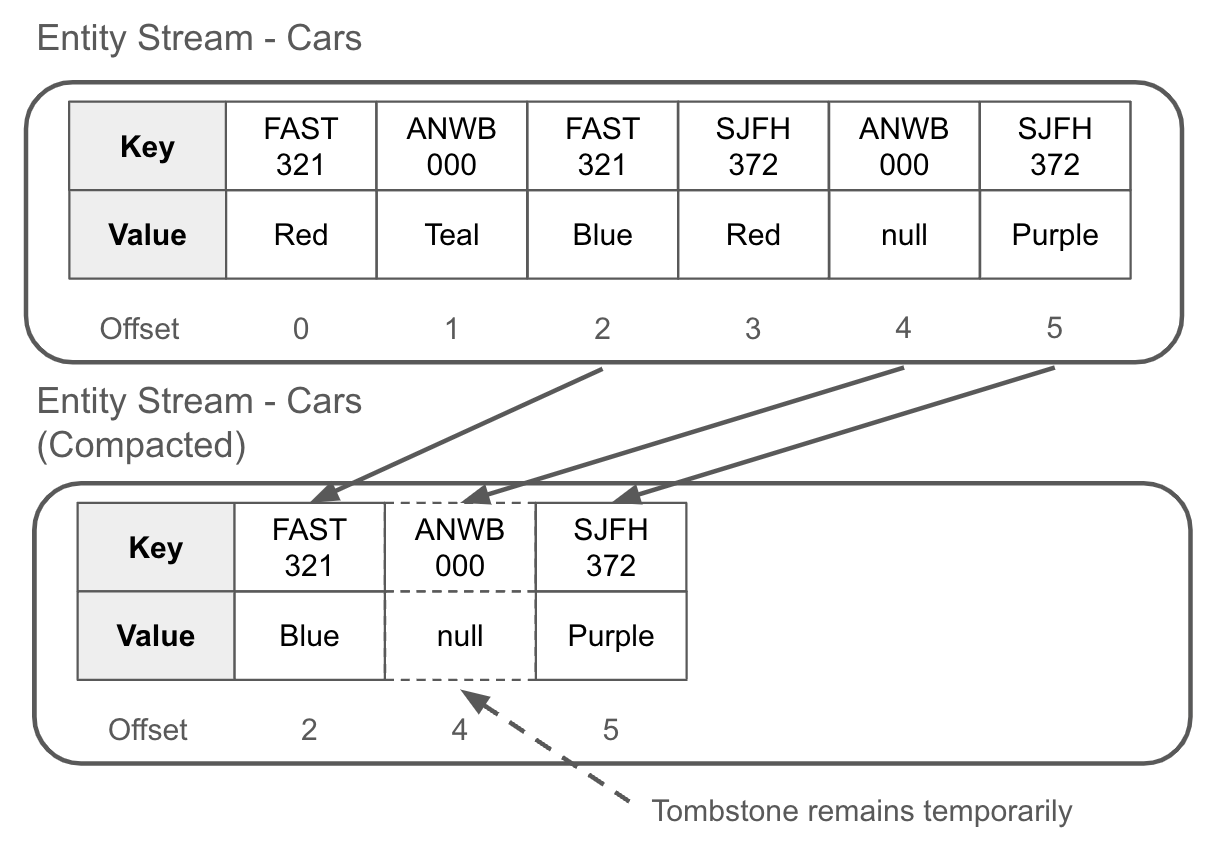

First, the bad news. You can’t delete a record from an event stream as you would a row in a database table. A major part of the value proposition of an event stream is its immutability. But you can issue a new event, known as a tombstone, that will allow you to delete records with the same key. Tombstones are most commonly used with entity event streams.

A tombstone is a keyed event with its value set to null, a convention established by the Apache Kafka project. Tombstones serve two purposes. First, they signal to the consumer that the data associated with that event key should now be considered deleted. To use the database analogy, deleting a row from a table would be equivalent to publishing a tombstone to an event stream for the same primary key.

Secondly, tombstones enable compaction. Compaction is an event broker process that reduces the size of the event streams by retaining only the most recent events for a given key. Events older than the tombstone will be deleted, and the remaining events are compacted down into a smaller set of files that are faster and easier to read. Event stream offsets are maintained such that no changes are required by the consumers. Figure 1-15 illustrates the logical compaction of an event stream in the event broker.

Figure 1-15. After a compaction, only the most recent record is kept for a given key — all predecessors records of the same key are deleted

The tombstone record typically remains in the event stream for a short period of time. In the case of Apache Kafka, the default value of 24 hours gives consumers a chance to read the tombstone before it too is (asynchronously) cleaned up.

Compaction is an asynchronous process performed only when a certain set of criteria is met, including dirty ratio, or record count in inactive segments. You can also manually trigger compaction. The precise mechanics of compaction vary with event broker selection, so you’ll have to consult your documentation accordingly.

Compaction reduces both disk usage and the quantity of events that must be processed to reach the current state, at the expense of eliminating a portion of the event stream history. Comapaction typically provides several useful configurations and guarantees, including:

- Minimum Compaction Lag

-

You can specify the minimum amount of time a record must live in the event stream before it is eligible for compaction. For example, Apache Kafka provides a min.compaction.lag.ms property on its topics. You can set this to a reasonable value, say 24 hours or 7 days, to ensure that your consumers can read the data before it is compacted away.

- Offset Guarantees

-

Offsets remain unchanged before, during, and after compaction. Compaction will introduce gaps between sequential offsets, but there remains no consequences for consumers sequentially consuming the event stream. Trying to read a specific offset that no longer exists will result in an error.

- Consistency Guarantees

-

A consumer reading from the start of a compacted topic can materialize exactly the same table as a consumer that has been running since the beginning.

Caution

While you may be able to find tools that allow you to delete records from an event stream manually, be very careful. Manually deleting records can lead to unexpected results, particularly if you have consumers that have already read the data. Simply deleting the records won’t fix the consumers derived state. Prevention of bad data is essential, and we’ll cover that more in chapter [Link to Come].

Stream-table duality and materialization allow our services to communicate state between one another. Compaction lets us keep our event streams to a reasonable size, in line with the domain of the data.

The Kappa Architecture

The Kappa architecture was first presented in 2014 by Jay Kreps, cocreator of Apache Kafka and cofounder of Confluent. The Kappa architecture relies on event streams as the sole record for both current and historical data. Consumers simply start consuming from the start of the stream to get a full picture of everything that has happened since inception, eventually reaching the head of the stream and the latest events as per Figure 1-16.

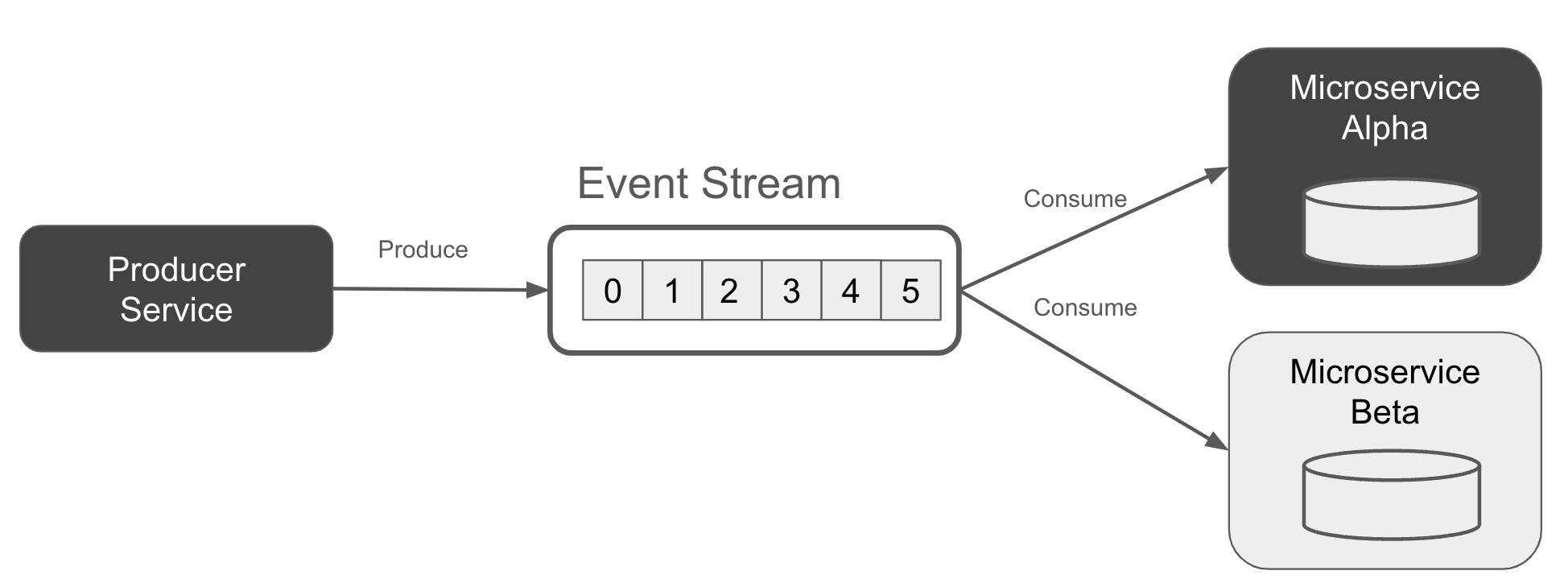

Figure 1-16. Kappa Architecture, with each service building its state from just an event stream

Kappa architectures do not use a secondary store for historical data, as is the case in the Lambda architecture (more on that in the next section). Kappa relies entirely on the event stream for storage and serving.

Kappa architectures have only been realized with modern event brokers, in combination with cheap storage ushered in with the Cloud computing revolution. It is affordable and easy to store as much data as you need in the stream, and you are no longer limited by broker technologies that applied mandatory TTLs, deleting your records after a few hours or days.

Event-driven microservices simply materialize their own state from the streams as they need. There is no need to access data from a secondary store somewhere else, nor any of the complexity overhead in managing extra permissions. It’s clean, simple, and easy to code.

There are some trade-offs with Kappa. Each service must build its own state, and for extremely large data sets it may take some time to materialize from the event stream. A low partition count and insufficient parallelization may see your service take many hours or days to materialize. This is often called “hydration time”, and you’ll need to plan for it in your service life cycle.

You can mitigate the hydration time problem by maintaining snapshots or backups of your materialized and computed state. Then, when loading your application, your service simply loads from its backed up data and restores processing from where it left off. While this is a responsibility of the application itself, snapshots and backups come with leading stream-processing technologies such as Apache Kafka Streams, Apache Flink, and Apache Spark Streaming, just to name a few of the open source industry leaders.

The Kappa architecture is key to building decoupled event-driven microservices as it provides your services with a single powerful guarantee - that your event stream can act as a single source of truth for a given set of data. There is no need to go elsewhere to a secondary or tertiary store. All the data you need is in that event stream, ready to go for your services.

What does the Kappa architecture look like in code?

Example 1-1 shows a Kafka Streams

application with twoKTables, which is just a stream materialized into a table using ECST. Next, the inventory KTable and the sales KTable are joined using a non-windowed INNER join to create a KTable of denormalized and enriched item inventory. Stream-processing frameworks make it very easy to handle event streams, build up internal state using ECST, and merge and join data from various data products, in just a few lines of code. Example 1-1. Showcasing joins with Kafka Streams

StreamsBuilderbuilder=newStreamsBuilder();//Materializes the tables from the source Kafka topicsKTableproducts=builder.table("products")KTableproductReviews=builder.table("product_reviews")//Join events on the primary key, apply business logic as neededKTableproductsWithReviews=products.join(productReviews,businessLogic(..),...)builder.build();

Apache Flink can provide something similar. Let’s say we wanted something more SQL-like.

Example 1-2. Showcasing joins with Flink SQL

CREATETABLEPRODUCTS(product_idBIGINT,nameVARCHAR,brandVARCHAR,descriptionVARCHAR,timestampTIMESTAMP(3),PRIMARYKEY(product_id)NOTENFORCED,)WITH('connector'='kafka','topic'='products','format'='protobuf','properties.bootstrap.servers'='localhost:9092','properties.group.id'='foobar');CREATETABLEPRODUCT_REVIEWS(product_idBIGINT,reviewsVARCHAR,timestampTIMESTAMP(3),PRIMARYKEY(product_id)NOTENFORCED,)WITH('connector'='kafka','topic'='product_reviews','format'='protobuf','properties.bootstrap.servers'='localhost:9092','properties.group.id'='foobar');CREATETABLEPRODUCTS_WITH_REVIEWSASSELECT*FROMPRODUCTSINNERJOINPRODUCT_REVIEWSONPRODUCTS.product_id=PRODUCT_REVIEWS.product_id;

Both the Flink SQL and the Kafka Streams code samples are simple, clear, and concise. We’ll cover building microservices with these technologies later in this book. But for now, as we go to look at the Lambda architecture, just keep in mind how easy it is to leverage the Kappa architecture. It just takes a few lines of code to transform a stream of events into a self-updating table capable of driving your microservice code.

The Lambda Architecture

The Lambda architecture relies on both an event stream for real-time data and a secondary repository for storage of historical data. The Lambda architecture is predicated on the outdated notion that you cannot store events in an event stream indefinitely. It is, however, consistent with the outdated technologies that enforced mandatory TTLs on event streams. It remains stubbornly persistent in the minds of many, and so we include it in this book for the sake of historical context, but not as an endorsement for use.

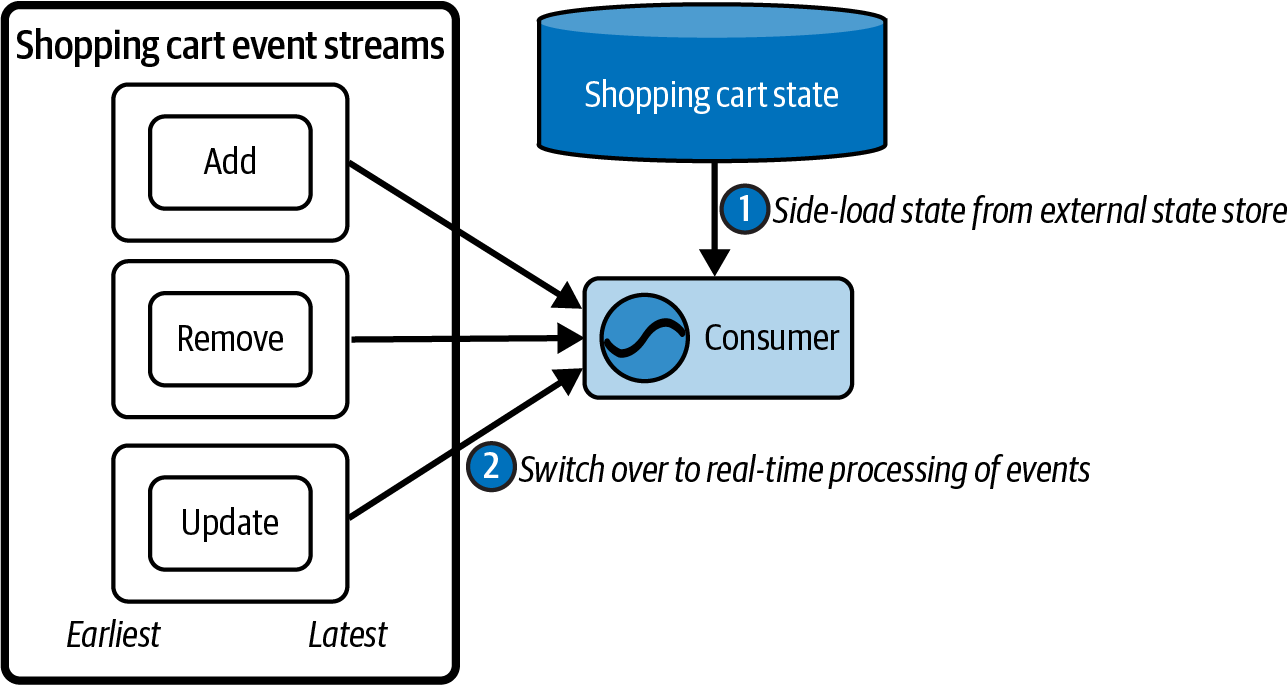

Consumers in a lambda architecture must obtain their historical data from the historical repository first, loading it into their respective state stores. Then, the consumers must swap over to the event stream for further updates and changes.

There are two main versions of this architecture. In the first, the historical repository and the stream are built independently. In the second, the historical repository is built from the stream. Let’s take a look at the first, first.

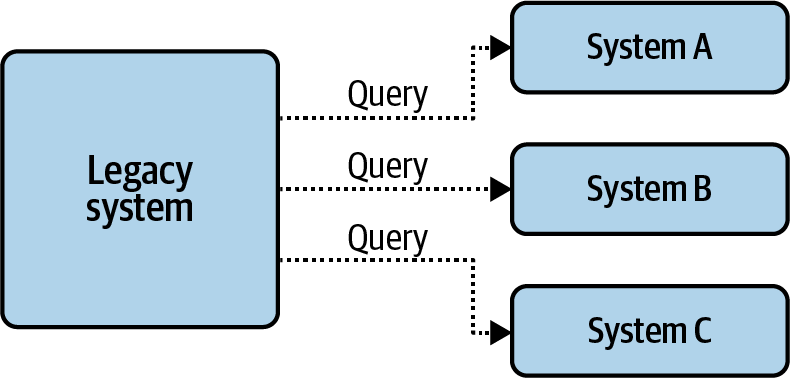

The historical repository contains the results of aggregations, materializations, or richer computations. It’s not just another location to store events. Historically speaking, the historical repository has often simply been just the internal database of the source system. In other words, you’d ask the source system for the historical data, load it in to your system, then swap over to the event stream. This is a bit of a anti-pattern as it puts the entire consumer load on the producer service, but it’s common enough that it’s worth mentioning.

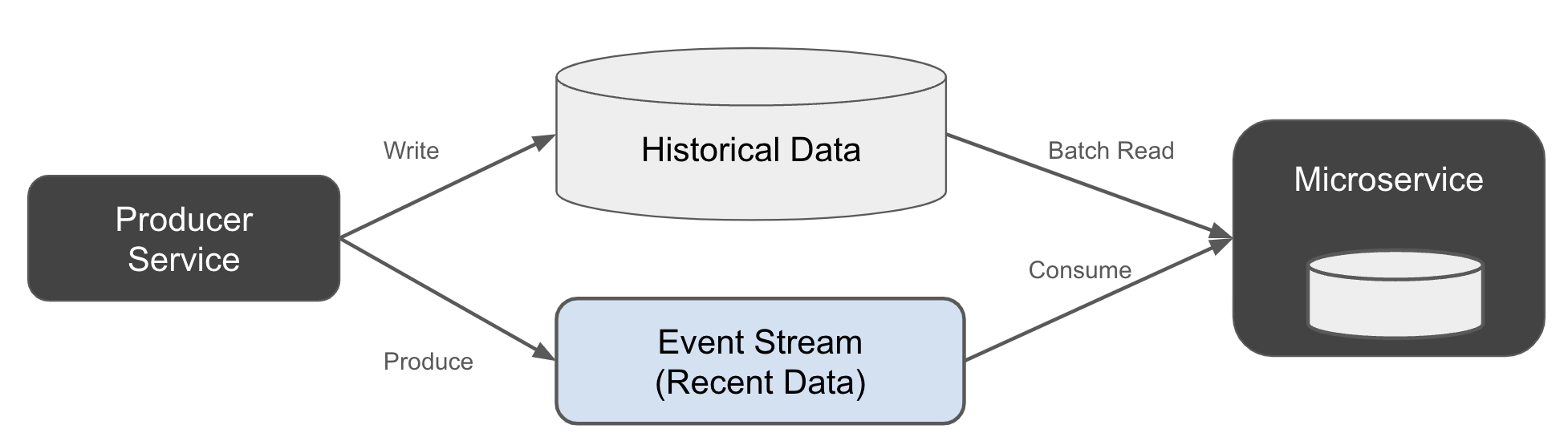

[Link to Come] shows a simplified implementation of the Lambda architecture. The producer writes new data to both the event stream and the historical data store.

Figure 1-17. Lambda architecture, writing to both the stream and the historical data table at the same time

A major flaw in this plan is that the data is not written atomically. The reality is that it’s very difficult to get high performance distributed transactions across multiple independent systems. What tends to happen in reality is that the producer updates one system first (say the historical data store), then the other (the event stream). An intermittent failure during the writes may see the event written to the historical store but not the stream - or vice verse, depending on your code.

The problem is that your stream and historical data set will diverge, meaning that you get different results than if you build from the stream than you would from the the historical data. An old consumer reading solely from the stream may compute a different result than a new consumer bootstrapping itself from the historical data. This can cause serious problems in your organization, and it can be very difficult to track down the reason why - particularly since the event stream data is time-limited, and evidence of its divergence is deleted after just a few days.

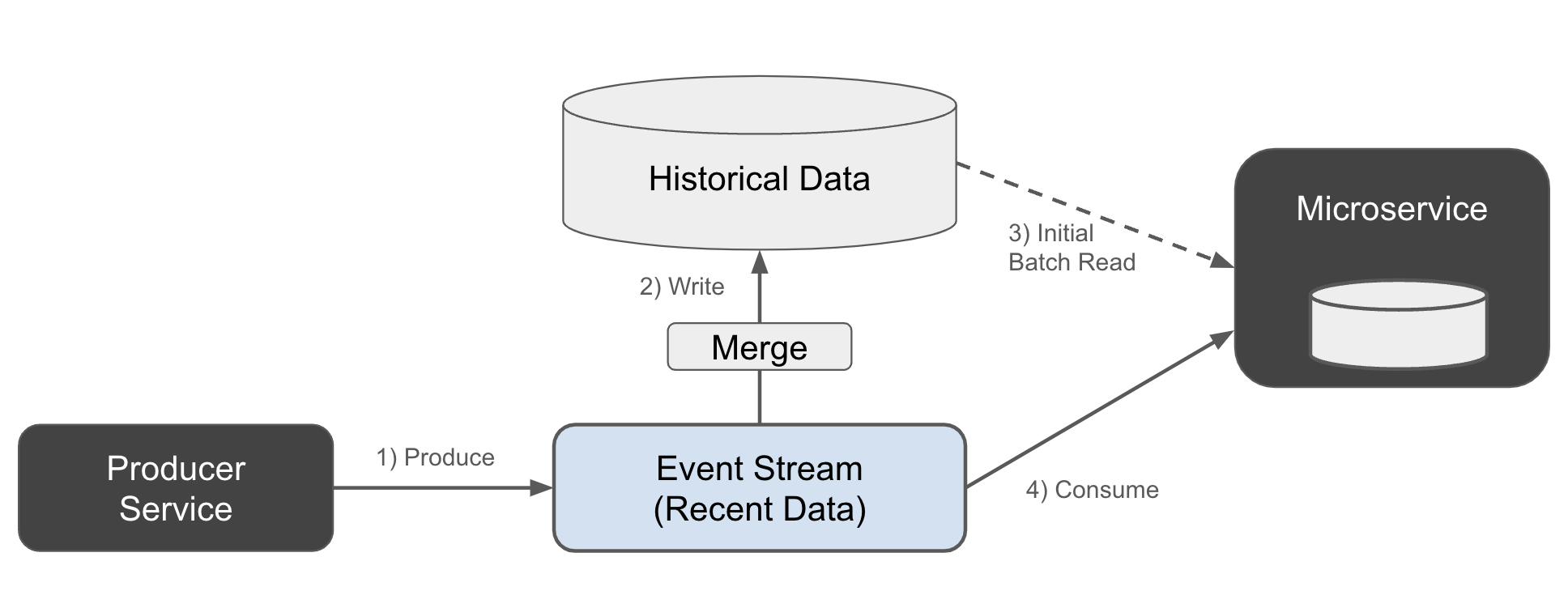

In the second version of Lambda architecture, the historical data is populated directly from the initial event stream, as shown in Figure 1-18.

Figure 1-18. Historical lambda data build from the event stream

The producer writes directly to the event stream (1). The historical data is populated by a secondary process that merges it into the data store (2). The consumer in turn reads the historical data first (3), then switches over to the event stream (4).

If you squint a little, you may find that this second version looks an awful lot like the Kappa architecture - except that we’re builing the state store outside of the microservice. The only complication is that we’ve introduced this awkward split between the event broker and the historic data store - an artifact due to the now-invalid notion that an event broker cannot store events indefinitely.

Overall, the Lambda architecture may seem simple in theory, but it ends up being very difficult to do well in practice. Why? Here are a few of the major obstacles, as we wrap this section up:

- The producer must maintain extra code

-

The code that writes to the stream, and the code that writes to the historical store.

- The consumer must maintain two code paths

-

One path reads from the historical store, and one path reads from the stream. The consumer must write code that seamlessly switches from one to the other, without missing any data or accidentally duplicating data. This can be quite challenging in practice, particularly with distributed systems and intermittent failures.

- Streamed data may not converge to the same results as the historical data

-

Consumers may not get exactly the same results if reading from the stream as the table. The producer must ensure that the data between the two does not diverge over time, but this is challenging without atomic updates to both the stream and the historical data store.

- The stream and historical data models must evolve in sync

-

Data isn’t static. If you have to update the data format, it will require code changes in two places - the historical store and the stream.

- Merging multiple Lambda-powered data sets is almost impossible

-

It’s easy to merge multiple Kappa data sets, as illustrated in [Link to Come]. There is no crossover from one source to another, so you can simply stream the data from the event stream and get consistent results. But there’s no natural governance between the stream and the historical data set. Event stream records and historical data will overlap, the rules for merging the former into the latter will differ by data set, as will rules for expiring records from the event stream.

Reconciling the dual storage of stream and historical is difficult for just a single data set. It’s even more challenging when you bring multiple data sets into the mix, each with its own unique stream/historical data boundaries.

The long and the short of it is that Lambda architecture is simply too difficult to manage at any reasonable scale. It puts the entire onus of accessing data onto the consumer, forcing them to reconcile two the seams not only between the stream and the historical data, but also the steams and historial data seams of all the other data sets they want to process too. It is far easier, both cognitively and coding-wise, to rely on the Kappa architecture with the single stream source for providing historical data.

Event Data Definitions and Schemas

Event data serves as the means of long term and implementation agnostic data storage, as well as the communication mechanism between services. Therefore, it is important that both the producers and consumers of events have a common understanding of the meaning of the data. Ideally, the consumer must be able to interpret the contents and meaning of an event without having to consult with the owner of the producing service. This requires a common language for communication between producers and consumers and is analogous to an API definition between synchronous request-response services.Schematization selections such as Apache Avro and Google’s Protobuf provide two features that are leveraged heavily in event-driven microservices. First, they provide an evolution framework, where certain sets of changes can be safely made to the schemas without requiring downstream consumers to make a code change. Second, they also provide the means to generate typed classes (where applicable) to convert the schematized data into plain old objects in the language of your choice. This makes the creation of business logic far simpler and more transparent in the development of microservices. [Link to Come] covers these topics in greater detail.

Powering Microservices with the Event Broker

Event broker systems suitable for large-scale enterprises all generally follow the same model. Multiple, distributed event brokers work together in a cluster to provide a platform for the production and consumption of event streams and queues. This model provides several essential features that are required for running an event-driven ecosystem at scale:

- Scalability

-

Additional event broker instances can be added to increase the cluster’s production, consumption, and data storage capacity.

- Durability

-

Event data is replicated between nodes. This permits a cluster of brokers to both preserve and continue serving data when a broker fails.

- High availability

-

A cluster of event broker nodes enables clients to connect to other nodes in the case of a broker failure. This permits the clients to maintain full uptime.

- High-performance

-

Multiple broker nodes share the production and consumption load. In addition, each broker node must be highly performant to be able to handle hundreds of thousands of writes or reads per second.

Though there are different ways in which event data can be stored, replicated, and accessed behind the scenes of an event broker, they all generally provide the same mechanisms of storage and access to their clients.

Selecting an Event Broker

While this book will make frequent reference to Apache Kafka as an example, there are other event brokers that could make suitable selections. Instead of comparing technologies directly, consider the following factors closely when selecting your event broker.

Support tooling

Support tools are essential for effectively developing event-driven microservices. Many of these tools are bound to the implementation of the event broker itself. Some of these include:

-

Browsing event stream records

-

Browsing schema data

-

Quotas, access control, and topic management

-

Monitoring, throughput, and lag measurements

See [Link to Come] for more information regarding tooling you may need.

Hosted services

Hosted services allow you to outsource the creation and management of your event broker.-

Do hosted solutions exist?

-

Will you purchase a hosted solution or host it internally?

-

Does the hosting agent provide monitoring, scaling, disaster recovery, replication, and multizone deployments?

-

Does it couple you to a single specific service provider?

-

Are there professional support services available?

Client libraries and processing frameworks

There are multiple event broker implementations to select from, each of which has varying levels of client support. It is important that your commonly used languages and tools work well with the client libraries.-

Do client libraries and frameworks exist in the required languages?

-

Will you be able to build the libraries if they do not exist?

-

Are you using commonly used frameworks or trying to roll your own?

Community support

Community support is an extremely important aspect of selecting an event broker. An open source and freely available project, such as Apache Kafka, is a particularly good example of an event broker with large community support.-

Is there online community support?

-

Is the technology mature and production-ready?

-

Is the technology commonly used across many organizations?

-

Is the technology attractive to prospective employees?

-

Will employees be excited to build with these technologies?

Indefinite and tiered storage

Indefinite storage lets you store events forever, provided you have the storage space to do so. Depending on the size of your event streams and the retention duration (e.g. indefinite), it may be preferable to store older data segments in slower but cheaper storage.

Tiered storage provides multiple layers of access performance, with a dedicated disk local to the event broker or its data-serving nodes providing the highest performance tier. Subsequent tiers can include options such as dedicated large-scale storage layer services (e.g., Amazon’s S3, Google Cloud Storage, and Azure Storage).

-

Is tiered storage automatically supported?

-

Can data be rolled into lower or higher tiers based on usage?

-

Can data be seamlessly retrieved from whichever tier it is stored in?

Summary

Event streams provide durable, replayable, and scalable data access. They can provide a full history of events, allowing your consumers to read whatever data they need via a single API. Every consumer is guaranteed an identical copy of the data, provided they read the stream as it was written.

Your event broker forms the core of your event-driven architectures. It’s responsible for hosting the event streams, and providing consistent, high-performance access to the underlying data. It’s responsible for durability, fault-tolerance, and scaling, to ensure that you can focus on building your services, not struggling with data access.

The producer service publishes a set of important business facts, broadcasting the data via the event stream to subscribed consumer services. The producer is no longer responsible for the varied query needs of all other services across the organization.

Consumers do not query the producer service for data, eliminating unnecessary point-to-point connections from your architecture. Previously a team may simply have written SQL queries or used request/response APIs to access data stored in a monolith’s database. In an event-driven architecture they instead access that data from an event stream, materializing and aggregating their own state for their own business needs.

The adoption of event-driven microservices enables the creation of services that use only the event broker to store and access data. While local copies of the events may certainly be used by the business logic of the microservice, the event broker remains the single source of truth for all data.

Chapter 2. Fundamentals of Event-Driven Microservices

An event-driven microservice, as introduced back in [Link to Come], is an application like any other. It requires the exact same type of compute, storage, and network resources as any other application. It also requires a place to store the source code, tools to build and deploy the application, and monitoring and logging to ensure healthy operation. As an event-driven application, it reads events from a stream (or streams), does work based on those events, and then outputs results - in the form of new events, API calls, or other forms of work.

[Link to Come] briefly introduced the main benefits of event-driven microservices. In this chapter, we’ll cover the fundamentals of event-driven microservices, exploring their roles and responsibilities, along with the requirements, rules, and recommendations for building healthy applications.

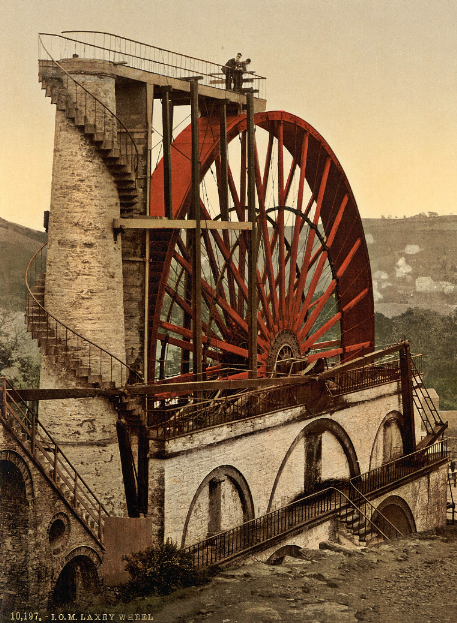

The Basics

Event-Driven means that the events drive the business logic, just as water from a stream turns the water wheel of a mill (Figure 2-1). Event-driven applications, be they micro or macro, typically only do work when there are events coming through it (or a timer expires - also an event). Otherwise, they sit idle until there are new events to process.

Figure 2-1. The water stream powers the wheel, as event streams power the microservice Source - wikimedia

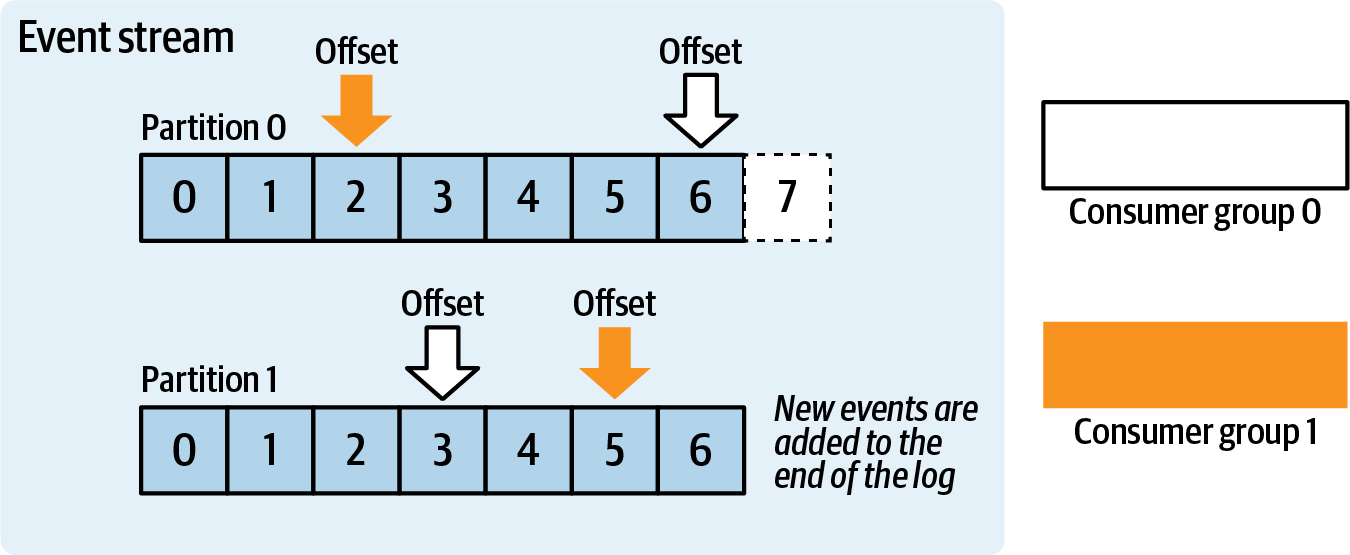

The consumer microservice reads events from the stream. Each consumer is responsible for updating its own pointers to previously read indices within the event stream. This index, known as the offset, is the measurement of the current event from the beginning of the event stream. Offsets permit multiple consumers to consume and track their progress independently of one another, as shown in Figure 2-2.

Figure 2-2. Consumer groups and their per-partition offsets

“So what makes up an event-driven microservice?” you may ask. Perhaps the best thing to do is just look at a few examples first, and work backwards from there. Let’s take a look at a few examples of microservice implementations.

The Basic Producer/Consumer

This example is entitled a “basic producer/consumer” because that’s all it really is - the producer produces events, the consumer consumes events, and you, the software dev, must write all the operations to stitch together the business logic. We’ll go more into this pattern in chapter [Link to Come], but for now we’ll start with a basic Python microservice example.

This service consumes events from a Kafka event broker (topic name “input_topic”, tallies a sum, and emits the updated total to a new event stream if the value exceeds 1000.

The python application creates the KafkaConsumer (1) and KafkaProcucer (2). The aptly named KafkaConsumer will read events from the specified topic, convert the events from serialized bytes to objects that application understands - in this case, plain text keys and JSON value. For a refresher on keys and values, you can look back to “The Structure of an Event”.

fromkafkaimportKafkaConsumer,KafkaProducerimportjsonimporttime# 1) Initialize Kafka consumerconsumer=KafkaConsumer('input_topic_name',bootstrap_servers=['localhost:9092'],value_deserializer=lambdax:json.loads(x.decode('utf-8')),key_deserializer=lambdax:x.decode('utf-8'),group_id='ch03_python_example_consumer_group_name',auto_offset_reset='earliest')# 2) Initialize Kafka producerproducer=KafkaProducer(bootstrap_servers=['localhost:9092'],value_serializer=lambdax:json.dumps(x).encode('utf-8'),key_serializer=lambdax:x.encode('utf-8'))# 3) Connect to the state store (A simple dictionary to keep track of the sums per key)key_sums={}# 4) Polling loopwhileTrue:# 5 Poll for new eventsevent_batch=consumer.poll(timeout_ms=1000)# 6 Process each partition's eventsforpartition_batchinevent_batch.values():foreventinpartition_batch:key=event.keynumber=event.value['number']# 7) Update sum for this keyifkeynotinkey_sums:key_sums[key]=0key_sums[key]+=number# 8) Check if sum exceeds 1000ifkey_sums[key]>1000:# Prepare and send new eventoutput_event={'key':key,'total_sum':key_sums[key]}# 9) Write to topic named "key_sums"producer.send('key_sums',key=key,value=output_event)# 10) Flushes the events to the brokerproducer.flush()

Next, the code creates a simple state store (3). You will most likely want to use something other than an in-memory dictionary, but this is a simple example and you could swap this for a RDBMS, a fully managed key-value store, or some other durable state store.

Tip

Choose the state store that’s most suitable for your microservice’s use case. Some are best served with high performance key-value stores, while other use cases are best served via RDBMS, graph, or document, for example.

The fourth step is to enter an endless loop (4) that polls the input topic for a batch of events (5) and processes each event on a per-partition basis (6). For each event, the business logic updates the key sum (7), and if it’s > 1000 (8), then it creates an event with the sum to write to the output topic key_sums (9 and 10).

This is a very simple application. But it showcases the key components common to the vast majority of event-driven microservices: event stream consumers, producers, state stores, and a continual processing loop driven by the arrival of new events on the input streams. The business logic is embedded within the processing loop, and though this example is very simple, there are far more powerful and complex operations that we can perform.

Let’s take a look at a few more examples.

The Stream-Processing Event-Driven Application

The Stream-Processing event-driven application is built using a stream-processing framework. Popular examples include Apache Kafka Streams, Apache Flink, Apache Spark, and Akka Streams. Some older examples that I cited in the first edition aren’t as common anymore, but included Apache Storm and Apache Samza.

The streaming frameworks provide a tighter integration with the event broker, and reduce the amount of overhead you have to deal with when building your EDM. Additionally, they tend to provide more powerful computations with higher level constructs, such as letting you join streams and materialized tables with very little effort on your part.

A key differentiator of stream-processing frameworks from the basic producer/consumer is that stream-processors require either a separate standalone cluster of its own (such as in the case of Flink and Spark), or a tight implementation with a specific event broker (such as the case of Kafka Streams).

Flink and Spark (and others like it) use their own proprietary processing clusters to manage state, scaling, durability, and the routing of data internally. Kafka Streams, on the other hand, relies on just the Kafka cluster to store their own durable state, provide topic repartitioning, and provide application scaling functionality.

Future chapters (lightweight, heavyweight) will cover both these types of frameworks in more detail. For now, let’s turn to a practical (and concatenated) example using Apache Flink.

// 1) TableEnvironment manages access and usage of the tables in the Flink applicationTableEnvironmenttableEnv=TableEnvironment.create(EnvironmentSettings.inStreamingMode());// 2) Create the output table for joined results, connecting to a Kafka broker.tableEnv.executeSql("CREATE TABLE PurchasesInnerJoinOrders ("+" order_id STRING,"+" /* Add all columns you want to output here */"+") WITH ("+" 'connector' = 'kafka',"+" 'topic' = 'PurchasesInnerJoinOrders',"+" 'properties.bootstrap.servers' = 'localhost:9092',"")");//Other table definitions removed for brevity. They are very similar.// 3) Reference the Sales and Purchase tables (assumes they are already created as tables in the catalog)Tablesales=tableEnv.from("Sales");Tablepurchases=tableEnv.from("Purchase");// 4) Perform inner join between Sales and Purchase tables on order_idTableresult=sales.join(purchases).where($("Sales.order_id").isEqual($("Purchase.order_id")));// 5) Insert the joined results into the output Kafka topicresult.executeInsert("PurchasesInnerJoinOrders");

This is a fairly terse application, but it packs a lot of power. First (1), we create the tableEnv connection that will manage our table environment work. Next, we declare the output table (2), which is connected to the Kafka topic PurchasesInnerJoinOrders. You’ll only have to create this once. I’ve omitted the other declarations for brevity.

Third (3), you create references to the Sales and Purchases tables so that you can use them in your application. These tables are materialized streams, as covered in “Materializing State from Entity Events”.

Fourth (4), the code declares the transformations to perform on the events populating the materialized tables. These few lines perform a lot of important work. Not only does it perform the operations of joining these two tables together, but it also handles fault-tolerance, load balancing, in-order processing, and big-data scale loads. It can also easily join streams and tables that are partitioned and keyed completely differently,something that you are likely to encounter on your own.

Fifth (5) and finally, the application writes the data to the output table. The machinations of the Flink streaming framework will handle the rest for committing the data to the Kafka topic.

Streaming frameworks provide very powerful capabilities, but typically require a larger upfront investment into supporting architecture. They also typically only provide limited language support (Python and JVM being the most popular), though some progress has on supporting other languages since the first edition of this book has been published. In fact, SQL (or SQL-like) languages have been possible the fastest growing of the bunch. Let’s take a look at those next.

The “Streaming SQL” Query

“Should your microservice actually just be a SQL query?”

It’s a good question, particularly with the rise of SQL streaming as managed services. The very same things that make SQL popular in the database world make it popular in the streaming world. It’s declarative nature means that you just declare the results you’re looking for. You don’t have to specify how to get it done, as that’s the job of the underlying processing engine.

Additionally, SQL lets you write very clear and concise statements about what it is you want to do with your data - in this case, the event stream. Here’s an example of the very same Flink application, but written using Flink SQL.

--Assumes we have already declares the materialized input tables and the output tableINSERTINTOPurchasesInnerJoinOrdersSELECT*FROMSalessINNERJOINPurchasepONs.order_id=p.order_id;

That’s it. Simple but powerful. This Streaming SQL query will run indefinitely, acting as a stand-alone microservice unto itself.

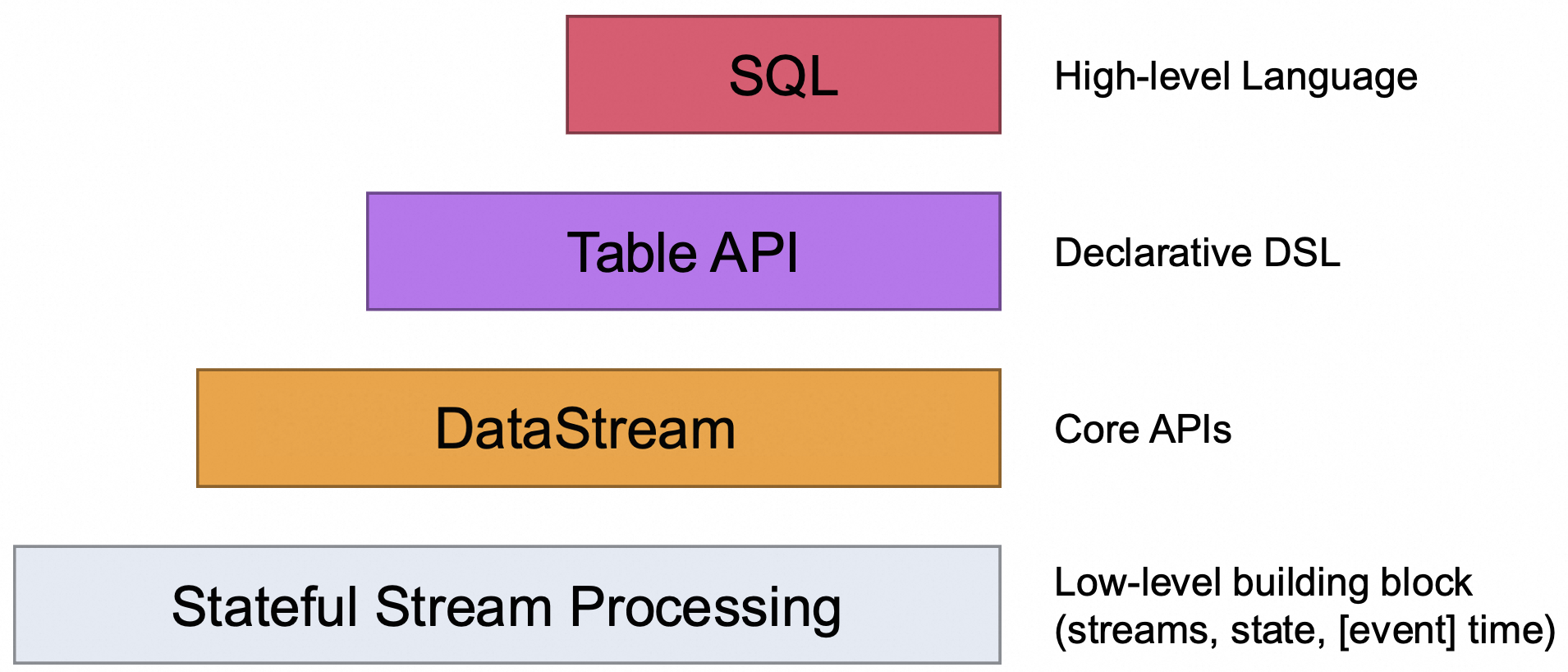

Streaming SQL is not yet standardized. There are many different flavors and types, and so you’ll have to do your own due diligence to find out what is and what isn’t supported per streaming framework. Additionally, streaming SQL tends to require a robust lower-level set of APIs to function beyond just toy examples. The Flink project, for example in Figure 2-3, has several layers of APIs, each which depends on the ones below it

Figure 2-3. The four levels of Flink APIs - Source - apache.org

In short, keep your eyes open for opportunities to use SQL within your microservices. It can save you a ton of time and effort, and let you get on with other work. We’ll look at streaming SQL in a bit more detail in the [Link to Come].

The Legacy Application

Legacy applications typically aren’t written with event-driven processing in mind. They’re usually old but important systems that serve critical business functions, but that aren’t under active development anymore. Changes to these services are rare, and are only performed when absolutely necessary. They’re also often the gatekeepers to important business data, siloed away inside the database or file stores of the system.

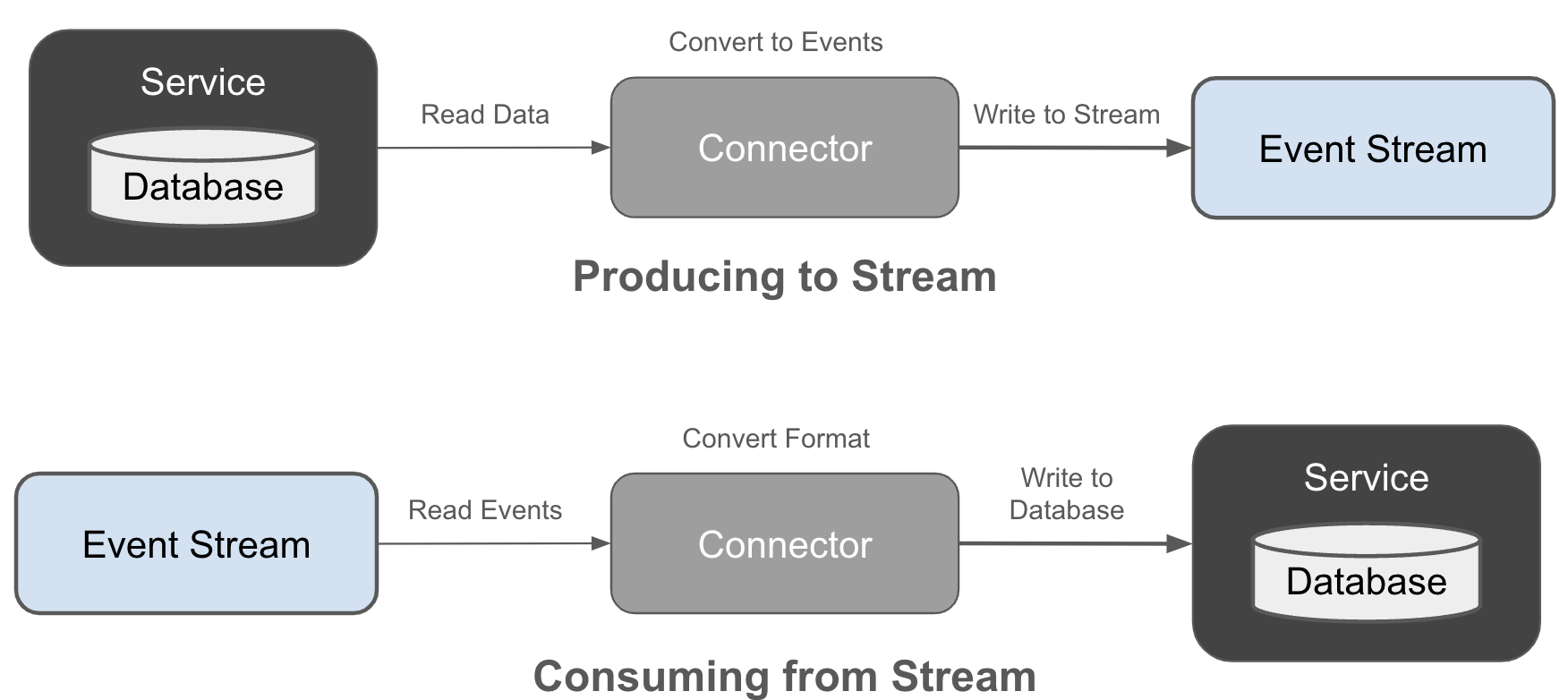

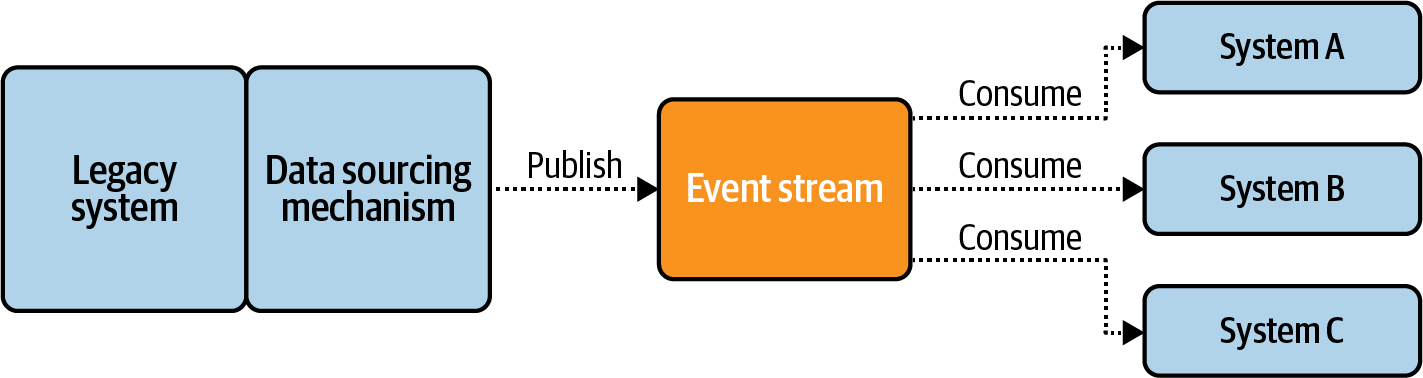

The legacy application is basically a rigid structure that you’re unlikely to be able to change. But you can still integrate it into your overarching event-driven architecture through the use of connectors, as shown in Figure 2-4.

Figure 2-4. Producing and consuming event streams with connectors

Connectors can read events from a source system or database, convert the data to events, and write it into an event stream. Similarly, connectors can also read events from a stream, convert them into a suitable format, and write them to a legacy system’s API or database. They provide the means for integrating these existing applications into your event-driven architecture without having to redesign the whole system as a native event-driven architecture.

Connectors enable you to get started with event streams without having to reinvent your entire architecture. They make it easy to get data into streams, so that you can start getting value from your event-driven microservices as soon as possible. With that, we’ll cover connectors in more detail in [Link to Come].

Now, these four services may all need to work together with one another. Unlike a single macro-service (e.g. a singular monolith), microservices are, by definition, a collection of services that rely on one another. Although we strive for decoupled and asynchronous communication, at the end of the day, each of these services may be (and often are) dependent on the work done by the other services. Thus we have some sort of network relationship… segway into Responsibilities

Event-Driven Microservice Responsibilities

- Service Boundaries and Scope

-

A well-defined set of boundaries. What is this application responsible for? And what is it not? The latter becomes more important when you have multiple microservices working together to fulfil a more complicated workflow, where it can be a bit difficult to discern the responsibilities of each service. By mapping microservices as cleanly as possible to bounded contexts, we can avoid much of the guesswork and ambiguity that may otherwise crop up.

- Scalability

-

The microservice is responsible for ensuring that it is scalable. Specifically, it must be written in such a way that allows it to scale horizontally (more instances) or vertically (a more powerful instance), depending on its requirements. EDM frameworks that provide scaling out of the box tend to have far greater appeal due to the seamless built-in scaling capabilities. The underlying processing power, however, is something that is provided by the microservice platform. We’ll talk about the more towards the end of this chapter.

- State Management

-

The microservice is solely responsible for the creates, reads, updates, and deletes made to its data store. Any operations that modify the state in the microservice remain entirely within its boundary of control. Any problems with the state store, such as running out of disk or failing to test applications changes also fall within the microservice’s problem space. [Link to Come] goes into greater detail on how to build and manage state for event-driven microservices.

- Track Stream Input Progress

-

Each microservice must keep track of its progress in reading the input event streams. For example, Apache Kafka tracks this progress using a

consumer groupone per logical microservice. Consumer groups are also used by many other leading event brokers, though a consumer can still choose to manually manipulate its own offsets (say to replay some records) or store them elsewhere, such as in their own data store. - Failure Recovery

-

Microservices are responsible for ensuring that they can get themselves back to a healthy state after a failure. The frameworks that we’ll rely on to run our microservices are usually quite good at bringing dead services back to life, but our services will be responsible for restoring state, restoring the input event stream progress, and picking back up where they left off before the failure.

The application must also take into consideration its stored state in relation to its stream input progress. In the case of a crash, the consuming service will resume from its last known good offsets, which could mean duplicate processing and duplicate state store updates.

- The Single Writer Topic Principle

-

Each event stream has one and only one producing microservice. This microservice is the owner of each event produced to that stream. This allows for the authoritative source of truth to always be known for any given event, by permitting the tracing of data lineage through the system. Access control mechanisms, as discussed in [Link to Come], should be used to enforce ownership and write boundaries.

- Partitioning and Event Keys

-

The microservice is responsible for choosing the output record’s primary key (see “The Structure of an Event”), and is also responsible for determining which partition to send that record to. Records of the same key typically go to the same partition, though you can choose other strategies (e.g. round-robin, random, or custom). Ultimately, it is your microservice that is responsible for selecting which partition to write the event to.

- Event Schema Definitions and Data Contracts

-

Just as it’s your microservice’s choice for choosing the record key, it’s also its choice for choosing the schema format for writing the event. For example, do you write

product_idas aString? Or do you write it as aInteger? Chapter [Link to Come] covers this in far more detail, but for now, plan to use a well-defined Avro, Protobuf, or JSON schema to write your records. It will make your event streams much easier to use, provide clarity for your consumers, and enable a much healthier event-driven ecosystem.

A microservice should also be reasonably sized. Does that sound ambiguous to you? Well read on.

How small should a microservice be?

First up, the goal of a microservice architecture isn’t to make as many as possible. You won’t win any awards for having the highest service count, nor would you even find the experience rewarding. In fact, you’d probably end up writing a blog about how you made a 1000 microservices and everything was awful.

The reality is that every service you build, micro or otherwise, incurs overhead. However, we also want to avoid the pains that come with a single monolith. Where’s the balance?

A microservice should be manageable by a single team, and the team should be able to have others within it work on the service as needed. How big is that team? The two-pizza team is a popular measurement, though it can be a bit of an unreliable guide depending on how hungry your developers are.

A single developer on this team should be able to fit the space of duties that the service has in their own head. A new developer coming to the microservice should be able to figure out the duties of that service, and how it accomplishes those duties, in just a day or two.

Ultimately, the real concern is that the service serves a particular bounded context and satisfies a business need. The size, or how micro it really is, comes as a secondary concern. You may end up with microservices that push the upper boundary of what I’ve prescribed here, and that’s okay - the important part is that you can identify the specific business concern it meets, the boundaries of the service, and be able to maintain a good mental list of what the service should do and should not.

Here are a couple of quick tips:

-

Only build as many services as are necessary. More is not better.

-

One person should be able to understand the whole service.

-

Your team will need to be responsible for owning and maintaining the service and the output event streams.

-

Look to add functionality to an existing service first. It reduces your per-application overhead, as we’ll see next in the next section.

-

For the microservices you do build, focus on building modular components. You may find that as your business develops that you need to break off a module to convert to its own microservice.

In the next section we’ll take a look at how to manage microservices, including how to manage them at scale.

Managing Microservices at Scale

Managing microservices can become increasingly difficult as the quantity of services grows. Each microservice requires specific compute resources, data stores, configurations, environment variables, and a whole host of other microservice-specific properties. Each microservice must also be manageable and deployable by the team that owns it. Containerization and virtualization, along with their associated management systems, are common ways to achieve this. Both options allow individual teams to customize the requirements of their microservices through a single unit of deployability.Putting Microservices into Containers

Containers, as popularized by Docker, isolate applications from one another. Containers leverage the existing host operating system via a shared kernel model. This provides basic separation between containers, while the container itself isolates environment variables, libraries, and other dependencies. Containers provide most of the benefits of a virtual machine (covered next) at a fraction of the cost, with fast startup times and low resource overhead.Containers’ shared operating system approach does have some tradeoffs. Containerized applications must be able to run on the host OS. If an application requires a specialized OS, then an independent host will need to be set up. Security is one of the major concerns, since containers share access to the host machine’s OS. A vulnerability in the kernel can put all the containers on that host at risk. With friendly workloads this is unlikely to be a problem, but current shared tenancy models in cloud computing are beginning to make it a bigger consideration.

Putting Microservices into Virtual Machines

Virtual machines (VMs) address some of the shortcomings of containers, though their adoption has been slower. Traditional VMs provide full isolation with a self-contained OS and virtualized hardware specified for each instance. Although this alternative provides higher security than containers, it has historically been much more expensive. Each VM has higher overhead costs compared to containers, with slower startup times and larger system footprints.Tip

Efforts are under way to make VMs cheaper and more efficient. Current initiatives include Google’s gVisor, Amazon’s Firecracker, and Kata Containers, to mention just a few. As these technologies improve, VMs will become a much more competitive alternative to containers for your microservice needs. It is worth keeping an eye on this domain should your needs be driven by security-first requirements.

Managing Containers and Virtual Machines

Containers and VMs are managed through a variety of purpose-built software known as container management systems (CMS). These control container deployment, resource allocation, and integration with the underlying compute resources. Popular and commonly used CMSes include Kubernetes, Docker Engine, Mesos Marathon, Amazon ECS, and Nomad.Microservices must be able to scale up and down depending on changing workloads, service-level agreements (SLAs), and performance requirements. Vertical scaling must be supported, in which compute resources such as CPU, memory, and disk are increased or decreased on each microservice instance. Horizontal scaling must also be supported, with new instances added or removed.

Each microservice should be deployed as a single unit. For many microservices, a single executable is all that is needed to perform its business requirements, and it can be deployed within a single container. Other microservices may be more complex, requiring coordination between multiple containers and external data stores. This is where something like Kubernetes’s pod concept comes into play, allowing for multiple containers to be deployed and reverted as a single action. Kubernetes also allows for single-run operations; for example, database migrations can be run during the execution of the single deployable.

VM management is supported by a number of implementations, but is currently more limited than container management. Kubernetes and Docker Engine support Google’s gVisor and Kata Containers, while Amazon’s platform supports AWS Firecracker. The lines between containers and VMs will continue to blur as development continues. Make sure that the CMS you select will handle the containers and VMs that you require of it.Tip

There are rich sets of resources available for Kubernetes, Docker, Mesos, Amazon ECS, and Nomad. The information they provide goes far beyond what I can present here. I encourage you to look into these materials for more information.

Paying the Microservice Tax

The microservice tax is the sum of costs, including financial, manpower, and opportunity, associated with implementing the tools, platforms, and components of a microservice architecture.The tax includes the costs of managing, deploying, and operating the event broker, CMS, deployment pipelines, monitoring solutions, and logging services. These expenses are unavoidable and are paid either centrally by the organization or independently by each team implementing microservices. The former results in a scalable, simplified, and unified framework for developing microservices, while the latter results in excessive overhead, duplicate solutions, fragmented tooling, and unsustainable growth.

Paying the microservice tax is not a trivial matter, and it is one of the largest impediments to getting started with event-driven microservices. Small organizations be best to stick with an architecture that better suits their business needs, such as a modular monolith, and only expand into microservices once their business runs into scaling and growth issues.

The good news is that the tax is not an all-or-nothing thing. You can invest in parts of your overall event-driven microservices platform as a product all its own, with incremental additions and iterative improvements.

Fortunately, self-hosted, hosted, and fully managed services are available for you to choose from. The microservice tax is being steadily reduced with new integrations between CMSes, event brokers, and other commonly needed tools.

Cloud services are worth an explicit mention for greatly reducing the cost of the Microservices tax. Cloud services and fully managed offerings have significantly improved since the first version of this book was released in 2020, and show no sign of abating. For example, you can easily find fully managed CMSes, event brokers, monitoring solutions, data catalogs, and integration/deployment pipeline services without having to run any of them yourself. It is much easier to get started with using cloud services for your microservice architecture than building your own, as it lets you experiment and trial your services before committing significant resources.

Summary

Event-driven microservices are applications like any other, relying on incoming events to drive their business logic. They can be written in many different languages, though the functionality you have available to you will vary accordingly. Basic producer/consumer microservices may be written in a wide range of languages, while purpose-built streaming frameworks may only support one or two languages. SQL queries and connectors also remain options, but their use requires further integration with application source code than what was covered in this chapter.

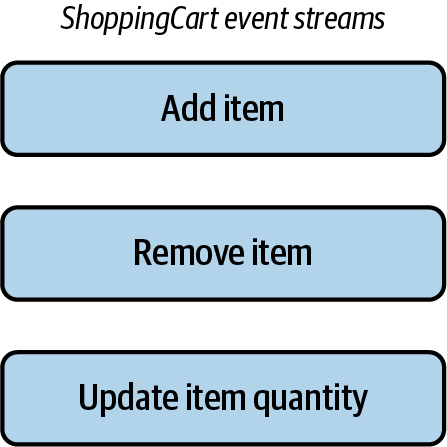

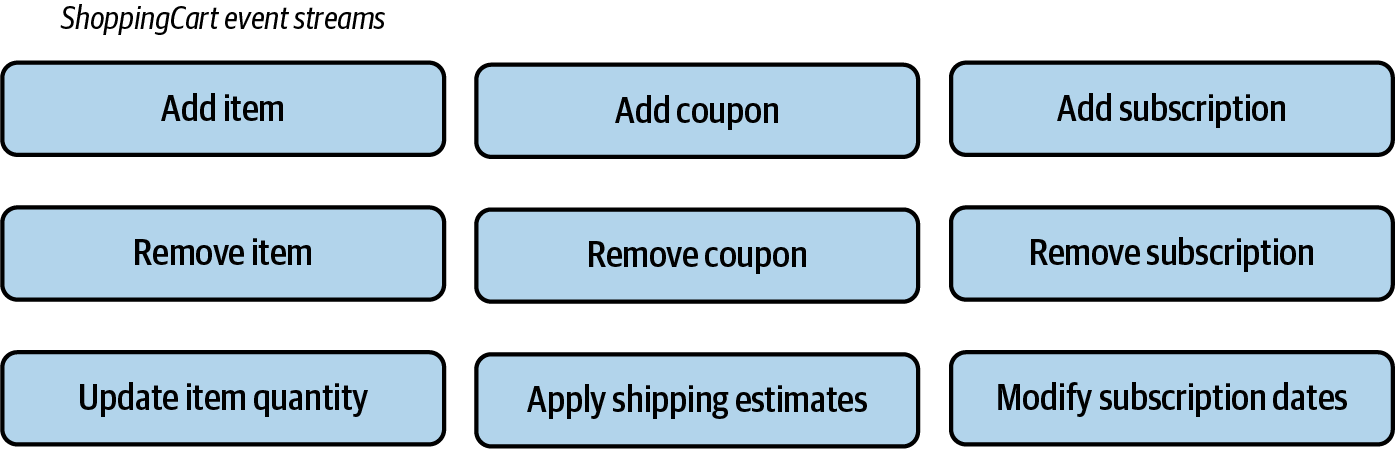

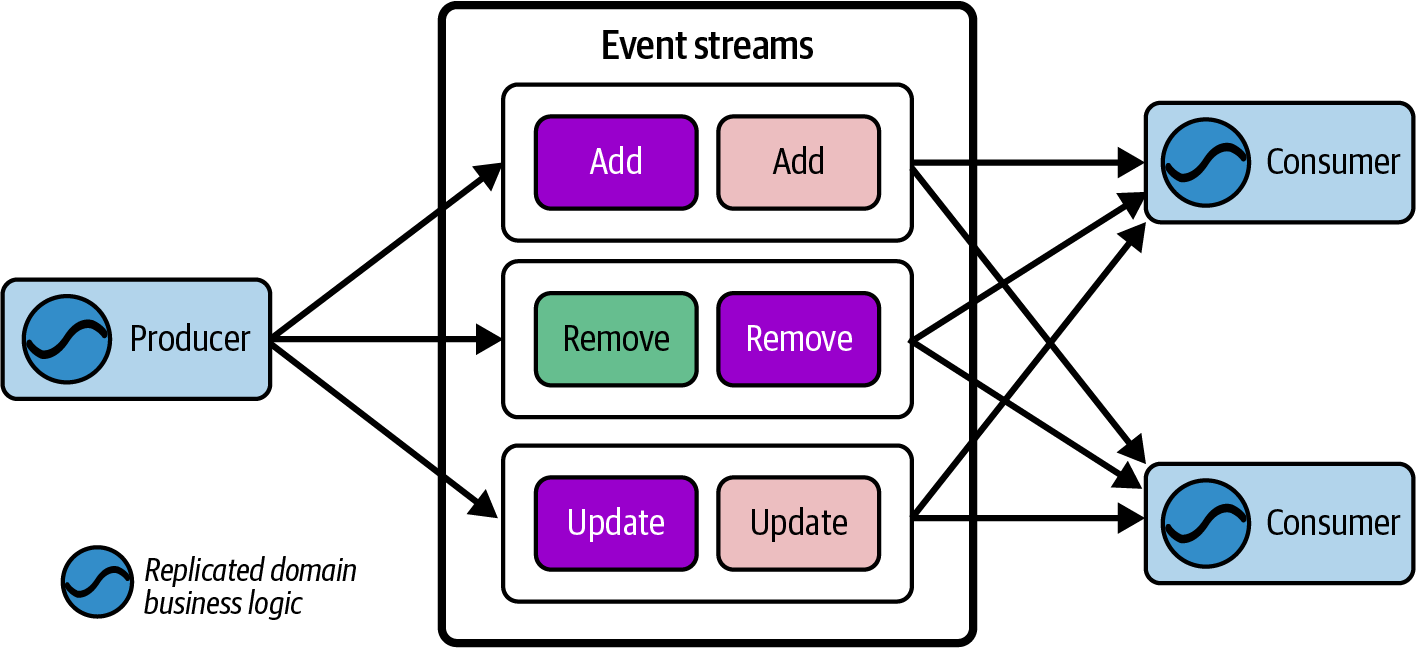

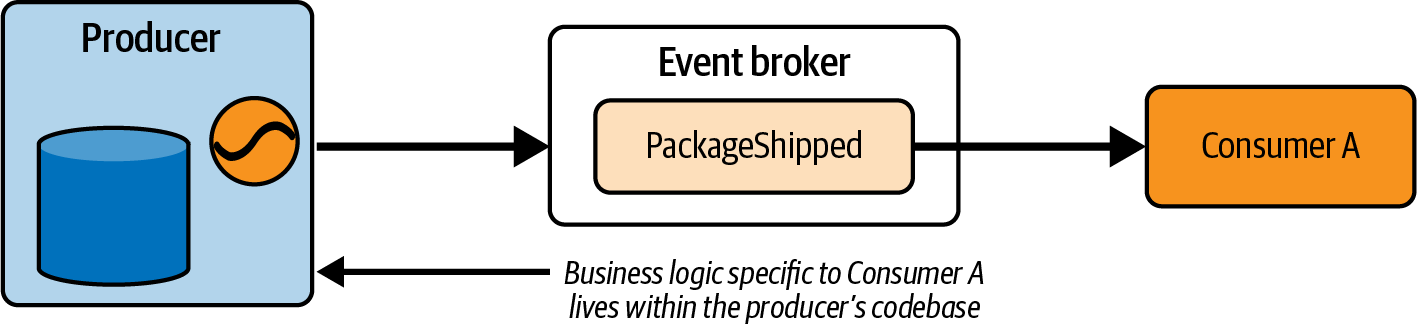

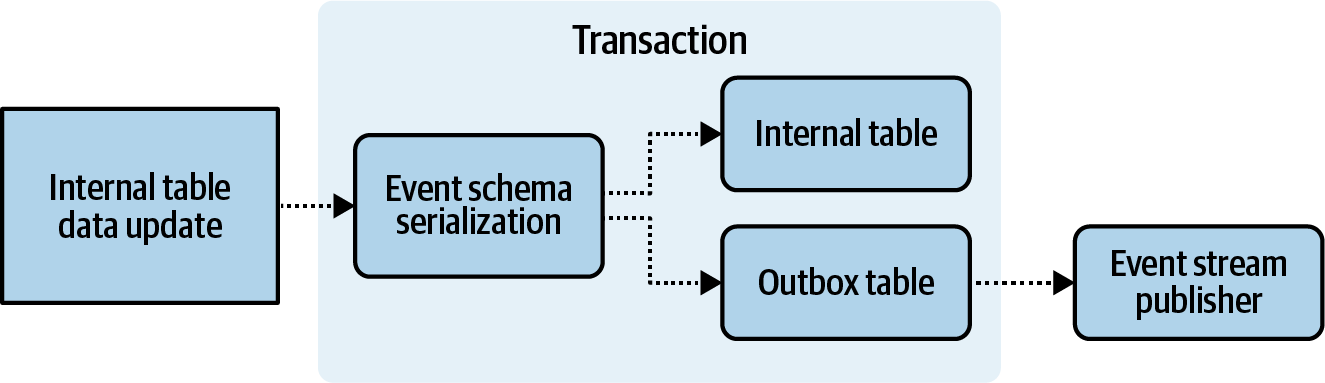

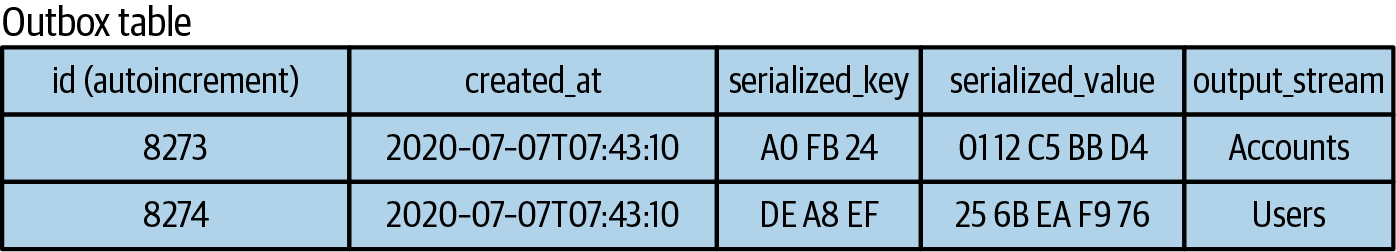

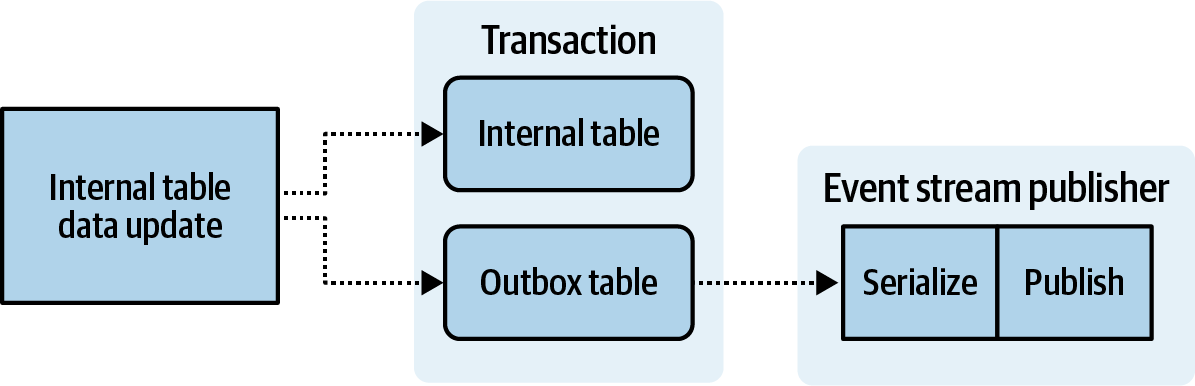

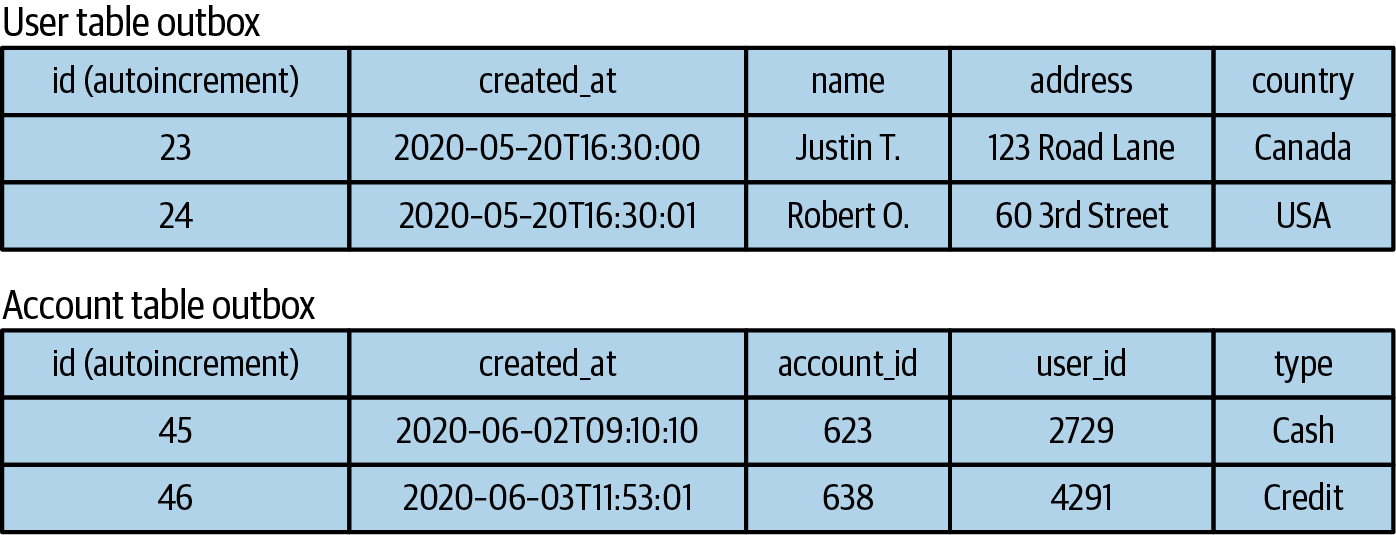

Microservices have a host of responsibilities, including managing their own code, state, and runtime properties. The service creators have the freedom to choose the technologies best suited to solving the business problem, though they of course need to ensure that the service can be maintained, updated, and debugged by others. Microservices are typically deployed in containers or using virtual machines, relying on container/VM management systems to help monitor and manage their life cycles.